Few companies store as much data on their servers as Google. According to at least one older estimate, Google could be holding as much as 15 exabytes. That is 15 million terabytes, or the equivalent of the storage in 30 million personal computers. Storing and managing such an enormous volume of data is no easy task, and how Google handles it is a lesson for anybody who deals with cloud and big data.

Designated Servers

Handling servers at that scale is quite similar to how you handle data within a database. A typical database contains tables that each hold a specific kind of information. For instance, the users table contains only information relating to users, while the payments table contains the billing details of these same users. Tables are built this way to make it quick to retrieve specific details. Querying for billing details from a table that contains only this information is easier than performing the same task on a master table that is much larger.
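As a minimal sketch of the split between user data and billing data described above, the following uses Python's built-in `sqlite3` module with an in-memory database. The table names, column names and sample rows are assumptions made up for illustration.

```python
import sqlite3

# Hypothetical schema: table names, columns and rows are illustrative only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE payments (
        user_id INTEGER NOT NULL REFERENCES users(id),
        card_last4 TEXT NOT NULL,
        billing_city TEXT NOT NULL
    );
    CREATE INDEX idx_payments_user ON payments(user_id);
""")
conn.executemany("INSERT INTO users (id, name) VALUES (?, ?)",
                 [(1, "Alice"), (2, "Bob")])
conn.executemany("INSERT INTO payments VALUES (?, ?, ?)",
                 [(1, "4242", "Austin"), (2, "1881", "Boston")])

def billing_details(user_id):
    """Look up billing details by reading only the payments table."""
    return conn.execute(
        "SELECT card_last4, billing_city FROM payments WHERE user_id = ?",
        (user_id,),
    ).fetchone()

print(billing_details(2))  # ('1881', 'Boston')
```

The lookup never reads the user rows at all, which is the point of keeping each kind of information in its own table.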

On a similar note, Google has servers designated to perform specific tasks. It has Index servers that hold the list of document IDs matching the user’s query, Ad servers that manage ads on the search results pages, Data-Gathering servers that send out bots to crawl the web, and even Spelling servers that help correct typos in a user’s search query. Even when it comes to populating these servers, it has been found that Google prioritizes organized and structured content over unstructured content. One study found that it takes Google 14 minutes on average to crawl content listed in sitemaps. In comparison, it took the same spiders from the Data-Gathering servers nearly 1,375 minutes, roughly a hundred times longer, to crawl content that was not listed in a sitemap.

The benefits of using such designated servers and structured data processing systems are twofold. First, the search engine saves time by reaching out to specific servers to retrieve the specific components that make up the final results page. Second, these processes can run in parallel, which further reduces the time it takes to build the final results page.
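The parallel fan-out can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the three functions below are hypothetical stand-ins for the designated servers (real ones would be network calls), and nothing here reflects Google's actual implementation.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-ins for designated servers; all return canned data.
def index_server(query):
    return ["doc-17", "doc-42"]

def ad_server(query):
    return ["ad-3"]

def spelling_server(query):
    corrections = {"gogle": "google"}
    return corrections.get(query, query)

def build_results_page(query):
    """Ask every designated server in parallel and merge the answers."""
    with ThreadPoolExecutor(max_workers=3) as pool:
        docs = pool.submit(index_server, query)
        ads = pool.submit(ad_server, query)
        spelling = pool.submit(spelling_server, query)
        return {
            "documents": docs.result(),
            "ads": ads.result(),
            "did_you_mean": spelling.result(),
        }

page = build_results_page("gogle")
print(page["did_you_mean"])  # google
```

Because the three requests are submitted before any result is awaited, the page takes roughly as long as the slowest server rather than the sum of all three.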

Lesson: Dedicate your servers to specific data processing tasks. It can vastly speed up your processes.

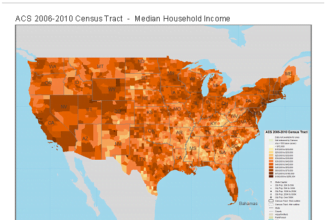

Data Storage and Retention

The Google search engine business thrives on data, so how the company stores, manages and retains data is a great lesson for any technology company. The first mantra when it comes to storage and retention is that not all data is created equal. Some data is accessed quite frequently, some not so frequently, and the rest may no longer be relevant. Cached versions of dead web pages (pages that have been removed or edited) have no reason to be stored and may be removed from Google's servers. At the same time, cached pages of an inconsequential news item from the 1990s are rarely accessed today. While such content cannot be deleted, Google puts it in “cold storage”: cheaper servers that keep data in compressed form, at the cost of slower retrieval.
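A retention policy along these lines might be sketched as follows. The one-year threshold and the function names are assumptions chosen for illustration, and `zlib` stands in for whatever compression a real cold tier would use.

```python
import zlib
from datetime import datetime, timedelta

# Assumed policy threshold; tune it to your own access patterns.
COLD_AFTER = timedelta(days=365)

def choose_tier(source_is_live, last_accessed, now):
    """Return 'delete', 'cold' or 'active' for one stored item."""
    if not source_is_live:
        return "delete"   # e.g. cached copy of a page that no longer exists
    if now - last_accessed > COLD_AFTER:
        return "cold"     # rarely read: cheaper, slower storage
    return "active"

def to_cold_storage(payload):
    """Compress before archiving; later reads pay a decompression cost."""
    return zlib.compress(payload, 9)

now = datetime(2024, 1, 1)
print(choose_tier(True, datetime(1998, 5, 1), now))  # cold
```

The order of the checks matters: an item whose source is gone is dropped even if it was read recently.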

Lesson: Prioritize your data and decide what to do with each kind: remove it, put it in cold storage or keep it on your active servers.

Data Loss Prevention

When you index several petabytes of information each day, the risk of losing data is very real, especially if you are Google and your business depends on it. According to a paper published on the Google File System (GFS), the company stores three copies of each piece of data. This means that if 20 petabytes of data are indexed each day, Google needs to store as much as 60 petabytes. That is a tripling of your data infrastructure investment, and while it is a massive expense, there is no way around it.
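The arithmetic and the idea behind it can be illustrated with a toy model. This is a sketch under loud assumptions: storage nodes are plain dictionaries, and the round-robin placement is a simplification invented for the example, not the placement policy GFS actually uses.

```python
REPLICAS = 3  # number of copies described in the text

def raw_storage_pb(indexed_pb, replicas=REPLICAS):
    """Raw capacity needed when every byte is stored `replicas` times."""
    return indexed_pb * replicas

def write_chunk(nodes, chunk_id, data, replicas=REPLICAS):
    """Store the chunk on `replicas` distinct nodes (naive round-robin).

    Assumes replicas <= len(nodes) so the copies land on different nodes.
    """
    start = chunk_id % len(nodes)
    for i in range(replicas):
        nodes[(start + i) % len(nodes)][chunk_id] = data

def read_chunk(nodes, chunk_id):
    """Return the chunk from the first node that still holds it."""
    for node in nodes:
        if chunk_id in node:
            return node[chunk_id]
    raise KeyError(chunk_id)

nodes = [{} for _ in range(5)]  # five hypothetical storage nodes
write_chunk(nodes, 7, b"index shard")
nodes[2].clear()                # simulate losing one node
nodes[3].clear()                # and a second one
print(read_chunk(nodes, 7))     # b'index shard'
print(raw_storage_pb(20))       # 60
```

With three copies, the chunk survives the loss of any two nodes that hold it; the price is the tripled raw capacity computed by `raw_storage_pb`.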

Lesson: The only way to prevent data loss is by replicating content. It costs money, but it is a worthwhile investment that needs to be budgeted for.