This past week at Predictive Analytics World / Toronto (PAW) has been a great time for connecting with thought leaders and practitioners in the field. Sometimes there are unexpected pleasures as well, which was certainly the case this time. One of the exhibitors for the eMetrics conference, co-located with PAW at the venue, was Unilytics, a web analytics company. At their booth there was a cylindrical container filled with crumpled dollar bills, with a sign soliciting predictions of how many dollar bills were in the container (the winner getting all the dollars). After watching the announcement of the winner, who guessed $352, only $10 off from the actual $362, I thought this would be the perfect opportunity for another Wisdom of Crowds test, just like the one conducted 9 months ago and blogged on here.

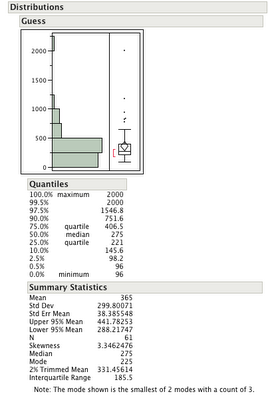

Two Unilytics employees at the booth, Gary Panchoo and Keith MacDonald, were kind enough to indulge me and my request to compute the average of all the guesses. John Elder was also there, licking his wounds from finishing a close second: his guess of $374 was off by $12, a mere $2 further from the actual count than the winning entry! The results of the analysis are here (summary statistics created by JMP Pro 10 for the Mac). In summary, the results are as follows:

Dollar Bill Guess Scores

| Method | Guess Value | Error |
|---|---|---|
| Actual | 362 | |
| Ensemble/Average (N=61) | 365 | 3 |
| Winning Guess (person) | 352 | 10 |
| John Elder | 374 | 12 |
| Guess without outlier (2000), 3rd place | 338 | 24 |
| Median, 19th place | 275 | 87 |

So once again, the average of the entries (the “Crowds” answer) beat the single best entry. What is fascinating to me about this is not that the average won (though this in and of itself isn’t terribly surprising), but rather how it won. Summary statistics are below. Note that the median is 275, far below the mean. Note too how skewed the distribution of guesses is (skew = 3.35). The fact that the guesses are skewed positively for a relatively small answer (362) isn’t a surprise, but the amount of skew is a bit surprising to me.

What these statistics tell us is that while the mean value of the guesses would have been the winner, a more robust statistic would not, meaning that the skew was critical in obtaining a good guess. Or put another way, people more often than not under-guessed by quite a bit (the median is off by 87). Or put a third way, the outlier (2000), which one might naturally want to discount because it was a crazy guess, was instrumental to the average being correct. In the prior post on this from July 2011, I trimmed the guesses, removing the “crazy” ones. So when should we remove the wild guesses and when shouldn’t we? (If I had removed the 2000, the “average” still would have finished 3rd.) I have no answer for when guesses should be judged unreasonable, but I wasn’t inclined to remove the 2000 initially here. Full stats from JMP are below, with the histogram showing the amount of skew that exists in this data.
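The effect of that single outlier can be checked with a little arithmetic. The sketch below is only a rough illustration: it works from the rounded figures reported in this post (mean 365, N = 61, outlier 2000, median 275) rather than from the raw guesses, so the recomputed mean is approximate, and the helper name `mean_without` is my own.

```python
# Figures taken from the tables in this post; the mean is the rounded value.
ACTUAL = 362
N = 61
MEAN_ALL = 365
OUTLIER = 2000
MEDIAN = 275


def mean_without(mean_all, n, value):
    """Mean of the remaining n - 1 values after dropping one value."""
    return (mean_all * n - value) / (n - 1)


mean_trimmed = mean_without(MEAN_ALL, N, OUTLIER)

# Absolute error of each candidate "crowd" answer against the actual count.
errors = {
    "mean, all guesses": abs(MEAN_ALL - ACTUAL),
    "mean, 2000 removed": abs(round(mean_trimmed) - ACTUAL),
    "median": abs(MEDIAN - ACTUAL),
}

for name, err in errors.items():
    print(f"{name}: off by {err}")
```

Dropping the one guess of 2000 moves the mean by roughly 27 dollars, which is the difference between winning outright and finishing 3rd.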

Distribution of Dollar Bill Guesses – Built with JMP

Summary Statistics

| Statistic | Guess Value |
|---|---|
| Mean | 365 |
| Std Dev | 299.80071 |
| Std Err Mean | 38.385548 |
| Upper 95% Mean | 441.78253 |
| Lower 95% Mean | 288.21747 |
| N | 61 |
| Skewness | 3.3462476 |
| Median | 275 |
| Mode | 225 |
| 2% Trimmed Mean | 331.45614 |
| Interquartile Range | 185.5 |

Note: The mode shown is the smallest of 2 modes with a count of 3.
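For readers who want to see how the standard error and the 95% limits on the mean relate to the other figures, here is a minimal sketch that reproduces them from the reported mean, standard deviation, and N. It assumes the usual Student-t interval for a mean; the critical value `T_CRIT` is hard-coded as an approximation for 60 degrees of freedom and is my assumption, not something taken from the JMP output.

```python
import math

# Values reported in the summary statistics table.
N = 61
MEAN = 365
STD_DEV = 299.80071

# Assumed: approximate two-sided 95% Student-t critical value for N - 1 = 60 df.
T_CRIT = 2.0003

# Standard error of the mean, then the half-width of the 95% interval.
std_err = STD_DEV / math.sqrt(N)
half_width = T_CRIT * std_err
lower, upper = MEAN - half_width, MEAN + half_width

print(f"Std Err Mean:   {std_err:.4f}")
print(f"Lower 95% Mean: {lower:.2f}")
print(f"Upper 95% Mean: {upper:.2f}")
```

The interval is wide (roughly 288 to 442) because the standard deviation is almost as large as the mean itself, another symptom of the skew.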

Quantiles

| Quantile | Label | Guess Value |
|---|---|---|
| 100.0% | maximum | 2000 |
| 99.5% | | 2000 |
| 97.5% | | 1546.8 |
| 90.0% | | 751.6 |
| 75.0% | quartile | 406.5 |
| 50.0% | median | 275 |
| 25.0% | quartile | 221 |
| 10.0% | | 145.6 |
| 2.5% | | 98.2 |
| 0.5% | | 96 |
| 0.0% | minimum | 96 |