The data says that Hadoop isn’t going to replace your enterprise data warehouse.

Hadoop Adds Rather Than Subtracts

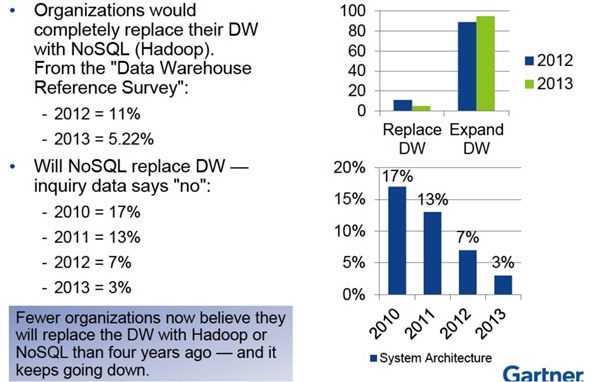

According to Gartner’s latest surveys, the number of CIOs that think that Hadoop will replace their existing analytics infrastructure has plummeted over the last few years, and is now down to just 3%.

Are these CIOs deluded? No. The fact that the numbers continue to drop is a good indication that they understand the technology and its limitations.

Note that this DOESN’T mean that enterprises don’t see the value of Hadoop, or aren’t going to massively increase their investment in it. Quite the opposite. At this week’s Hadoop Summit in Amsterdam, I heard from large enterprises such as Deutsche Telekom, Centrica, EDF, HSBC, and ING Bank that are making big strategic bets on Hadoop as a core data framework for the future.

But like the survey respondents, they believe that Hadoop ultimately “adds more than subtracts.” For example, Alasdair Anderson of HSBC gave a great, concrete example of the cost and flexibility advantages of Hadoop in his presentation “Hadoop-economics, the Invisible Hand of Big Data.” But he also included a “Health Warning” to use Hadoop where it makes sense:

“There’s no relationship between the EDW and Hadoop right now; they are going to be complementary. It’s NOT about rip and replace: we’re not going to get rid of RDBMS or MPP, but instead use the right tool for right job, and that will very much be driven by price.”

Ralph Kimball, one of the key data warehousing pioneers, echoed these sentiments in a recent Cloudera webinar. In a must-see presentation designed to explain Hadoop to data warehouse experts, he positively gushed over the new opportunities, but here’s what he had to say in the Q&A section:

“Here’s a question that made me laugh a little bit, but it’s a serious question: ‘Well does this mean that relational databases are going to die?’. I think that there was a sense, three or four years ago, that maybe this was all a giant zero sum game between Hadoop and relational databases, and that has simply gone away. Everyone has now realized that there’s a huge legacy value in relational databases for the purposes they are used for. Not only transaction processing, but for all the very focused, index-oriented queries on that kind of data, and that will continue in a very robust way forever. Hadoop, therefore, will present this alternative kind of environment for different types of analysis for different kinds of data, and the two of them will coexist. And they will call each other. There may be points at which the business user isn’t actually quite sure which one of them they are touching at any point of time.”

Embrace Technology Change Outside of Hadoop, Too

So Hadoop isn’t going to “replace” the EDW. But how about the notion that EDWs are legacy, to be maintained but not used for new analytic applications? Will EDWs become “the mainframes of 21st century,” as one Hadoop Summit attendee put it?

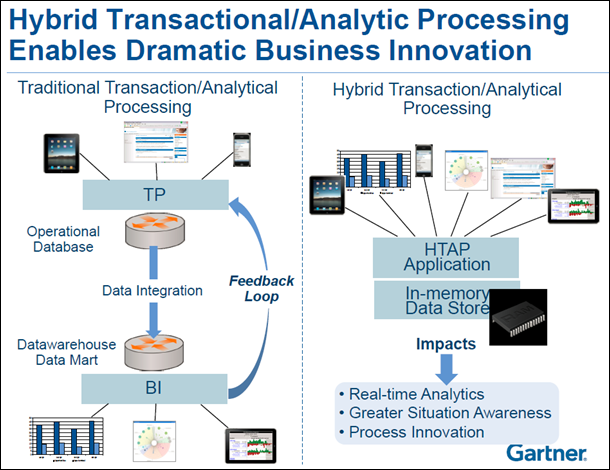

No. Ignoring the many advantages of Hadoop would be dumb. But it would be just as dumb to ignore the other revolutionary technology breakthroughs in the DW space. In particular, new in-memory processing opportunities have created a brand-new category that Gartner calls “hybrid transactional/analytic platforms” (HTAP):

“Hybrid transaction/analytical processing will empower application leaders to innovate via greater situation awareness and improved business agility. This will entail an upheaval in the established architectures, technologies and skills driven by use of in-memory computing technologies as enablers.”

HTAP isn’t just a way of speeding up existing applications and analytics. The simplicity of the architecture (data is stored just once) and the flexibility of the platform mean it has a lower total cost of ownership than traditional disk-based platforms. This means it’s also rapidly becoming the default platform for new business application deployments, and the heart of new “real-time” applications.

But isn’t Hadoop going to do all that?

No. This was a gratingly prevalent view at Hadoop Summit, but it’s firmly in the “wishful thinking” camp.

Yes, there are projects to make in-memory and ACID compliance part of the Hadoop Framework. Storm and Flume mean you can start using Hadoop with streaming data. YARN may turn into a “generic app provisioning system via Linux containers.”

Does this mean that you’ll be able to do more with Hadoop in the future? Yes. Is it going to be easier to make applications? Yes. Is forty years of business process and data warehousing technology and expertise going to be obsolete any time soon? No!

One small example comes from Alan Gates, one of the co-founders of Hortonworks. While presenting the new ACID features in the next version of Hive, he was asked whether they would enable OLTP support:

“We’re not aiming at that, it’s not what HIVE is good at, we don’t think it makes any sense, and it would be a total fail if you did that.”

There are other projects for adding transactions to Hadoop, but integration with the corporate world will require support for standards like SQL, and the irony is that it will be a big challenge for Hadoop to support even 22-year-old SQL-92. As analyst Merv Adrian put it in a recent Gartner conference presentation: “What is remarkable is that Hadoop does SQL. Just don’t expect it to do it well.” (More in Gartner’s report “Choosing Your SQL Access Strategy for Hadoop.”)

The Architecture of the Future

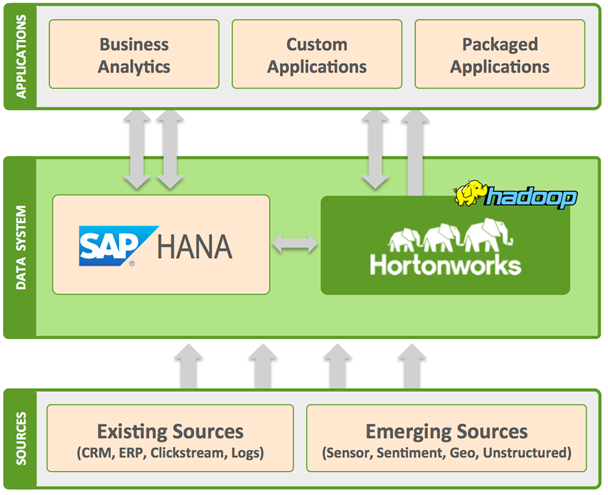

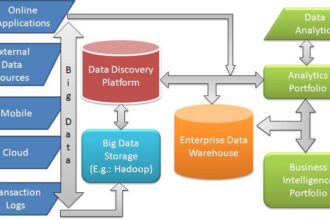

The right answer for the future is the boring, pragmatic one that big data organizations like Hortonworks emphasize in their “modern data architecture” diagram. To make the most of your data, you will need all these proven and new technologies working seamlessly together.

It’s About The Applications of The Future

The real opportunity of new technologies like Hadoop and in-memory processing is to enable new, more flexible, analytics-focused, actionable applications. And that takes much more than just a platform. Organizations want best-practice business applications capable of analyzing big data and putting it in the hands of the front-line people who need it, via cloud and mobile devices. And they want a vibrant ecosystem capable of helping them make the best use of those applications.

For more information about what that might look like, take a look at SAP’s big data solutions.