In AI-driven applications, complex tasks often need to be broken down into multiple subtasks. In many real-world scenarios, however, the exact subtasks cannot be determined in advance. For instance, in automated code generation, the number of files to be modified and the specific changes needed depend entirely on the given request. Traditional parallelized workflows struggle with this unpredictability because they require tasks to be defined upfront. This rigidity limits the adaptability of AI systems.

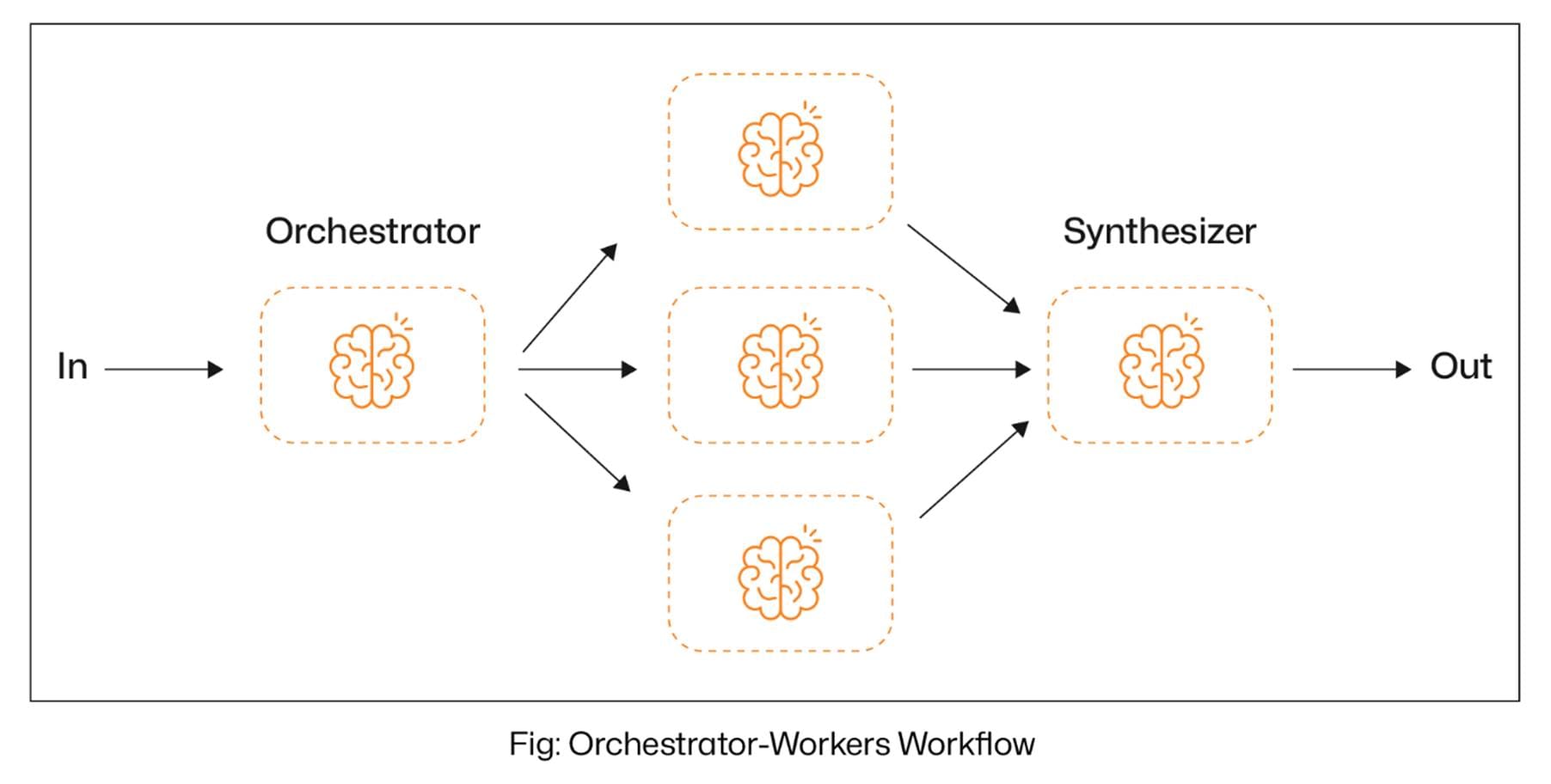

The Orchestrator-Workers Workflow Agent in LangGraph takes a more flexible approach to this challenge. Instead of relying on static task definitions, a central orchestrator LLM dynamically analyzes the input, determines the required subtasks, and delegates them to specialized worker LLMs. The orchestrator then collects and synthesizes the outputs into a cohesive final result. This design supports run-time decision-making and adaptive task management, so complex workflows are handled with greater flexibility and precision.

With that in mind, let’s dive into what the Orchestrator-Workers Workflow Agent in LangGraph is all about.

Inside LangGraph’s Orchestrator-Workers Agent: Smarter Task Distribution

The Orchestrator-Workers Workflow Agent in LangGraph is designed for dynamic task delegation. In this setup, a central orchestrator LLM analyzes the input, breaks it down into smaller subtasks, and assigns them to specialized worker LLMs. Once the workers complete their tasks, their outputs are synthesized into a cohesive final result.
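To make the flow concrete before we get to LangGraph itself, here is a minimal standard-library sketch of the pattern. It is only an illustration: `orchestrate`, `work`, `synthesize`, and `run` are hypothetical names, and the "LLM" calls are replaced by plain string functions so the example runs on its own.

```python
from concurrent.futures import ThreadPoolExecutor


def orchestrate(request: str) -> list[str]:
    """Stand-in for the orchestrator LLM: derive subtasks from the input."""
    # A real orchestrator would ask an LLM to plan; here we just split on commas.
    return [part.strip() for part in request.split(",") if part.strip()]


def work(subtask: str) -> str:
    """Stand-in for a worker LLM handling one subtask."""
    return f"result for {subtask!r}"


def synthesize(results: list[str]) -> str:
    """Combine the worker outputs into one final answer."""
    return "\n".join(results)


def run(request: str) -> str:
    subtasks = orchestrate(request)  # the number of subtasks depends on the input
    if not subtasks:
        return ""
    with ThreadPoolExecutor(max_workers=len(subtasks)) as pool:
        # map() preserves input order, so results line up with subtasks.
        results = list(pool.map(work, subtasks))
    return synthesize(results)
```

The key point is that nothing in `run` fixes the number of workers ahead of time; it follows whatever plan the orchestrator returns for that particular request.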

The main advantages of the Orchestrator-Workers workflow agent are:

- Adaptive Task Handling: Subtasks are not predefined but determined dynamically, making the workflow highly flexible.

- Scalability: The orchestrator can efficiently manage and scale multiple worker agents as needed.

- Improved Accuracy: The system ensures more precise and context-aware results by dynamically delegating tasks to specialized workers.

- Optimized Efficiency: Tasks are distributed efficiently, preventing bottlenecks and enabling parallel execution where possible.

Let’s now look at an example. We will build an orchestrator-worker workflow agent that uses the user’s input as a blog topic, such as “write a blog on agentic RAG.” The orchestrator analyzes the topic and plans the sections of the blog, including introduction, concepts and definitions, current applications, technological advancements, challenges and limitations, and more. Based on this plan, specialized worker nodes are dynamically assigned to each section to generate content in parallel. Finally, the synthesizer aggregates the outputs from all workers to deliver a cohesive final result.

Importing the necessary libraries.

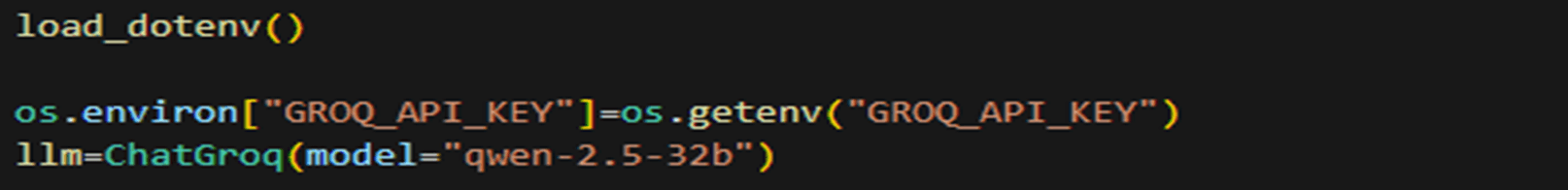

Now we need to load the LLM. For this blog, we will use the qwen2.5-32b model from Groq.

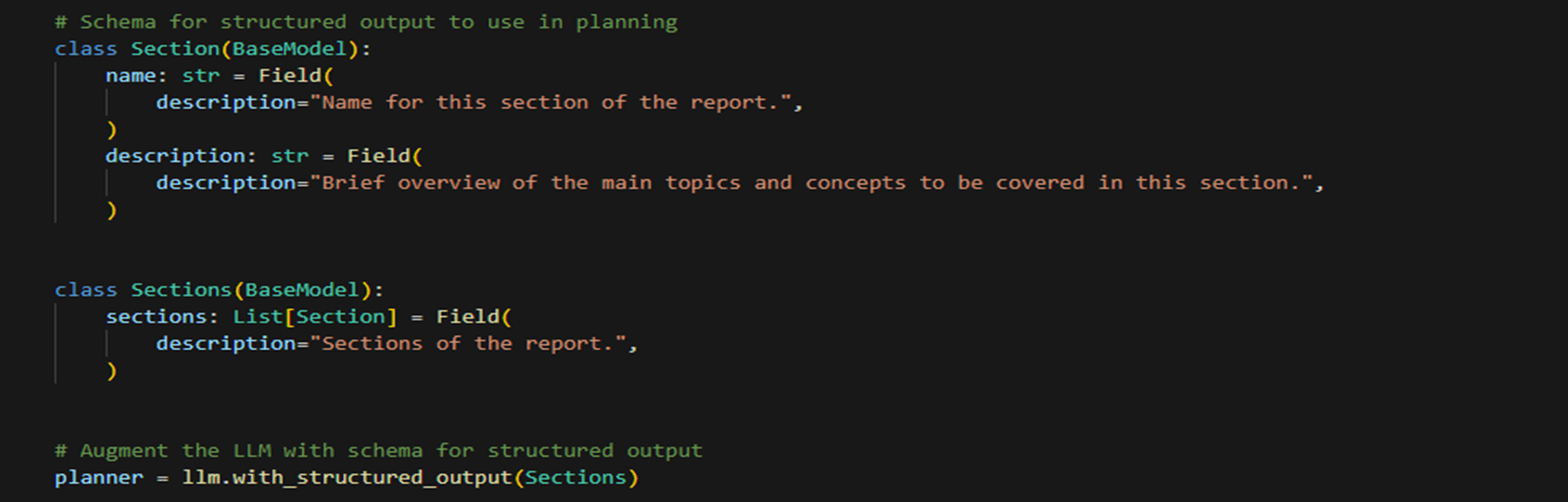

Now, let’s build a Pydantic class to ensure that the LLM produces structured output. In the Pydantic class, we will ensure that the LLM generates a list of sections, each containing the section name and description. These sections will later be given to workers so they can work on each section in parallel.
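The shape of that structured output can be sketched as follows. This is a standard-library stand-in that uses dataclasses rather than Pydantic, so it runs without extra packages; `Section`, `Sections`, and `parse_sections` are assumed names for illustration, not necessarily those used in the article's code.

```python
import json
from dataclasses import dataclass


@dataclass
class Section:
    name: str         # section title
    description: str  # what the section should cover


@dataclass
class Sections:
    sections: list[Section]


def parse_sections(raw: str) -> Sections:
    """Check an LLM's JSON reply against the expected shape."""
    data = json.loads(raw)
    items = data["sections"]
    if not isinstance(items, list):
        raise ValueError("'sections' must be a list")
    parsed = []
    for item in items:
        name, description = item["name"], item["description"]
        if not isinstance(name, str) or not isinstance(description, str):
            raise ValueError("name and description must be strings")
        parsed.append(Section(name=name, description=description))
    return Sections(sections=parsed)
```

With Pydantic, the same two classes would typically subclass `BaseModel`, and LangChain chat models can usually be bound to such a schema through `with_structured_output`, which takes over the parsing and validation done by hand here.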

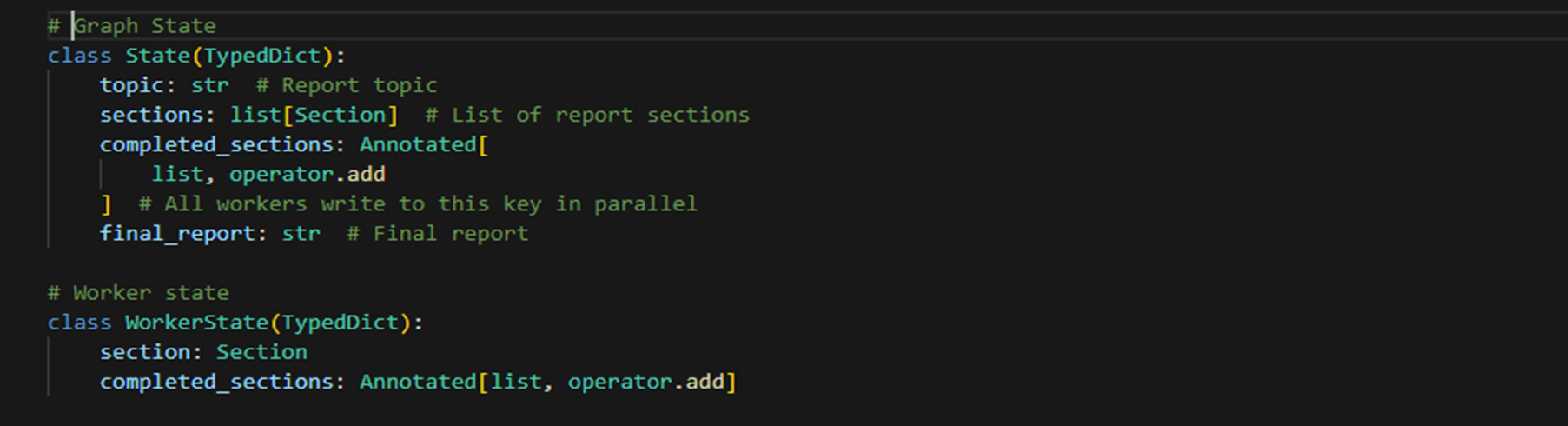

Next, we create the state classes that hold the variables shared across the graph. We will define two state classes: one for the entire graph state and one for the worker state.
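As a rough sketch, the two state classes could look like this. The field names (`topic`, `sections`, `completed_sections`, `final_report`) are assumptions chosen for the blog example. The `Annotated[list, operator.add]` annotation is the usual way to tell LangGraph to concatenate list updates from parallel nodes instead of overwriting them; `merge_updates` is a hypothetical helper that only imitates that behavior for illustration.

```python
import operator
from typing import Annotated, TypedDict


class State(TypedDict):
    topic: str            # blog topic supplied by the user
    sections: list[dict]  # plan produced by the orchestrator
    # Workers write here in parallel; the reducer concatenates their lists.
    completed_sections: Annotated[list, operator.add]
    final_report: str     # output of the synthesizer


class WorkerState(TypedDict):
    section: dict         # the single section this worker writes
    completed_sections: Annotated[list, operator.add]


def merge_updates(current: list, updates: list[list]) -> list:
    """Illustrates what an operator.add reducer does with parallel updates."""
    for update in updates:
        current = operator.add(current, update)
    return current
```

Without a reducer, two workers finishing at the same time would each try to set `completed_sections`, and one result could replace the other. With list concatenation, every worker's output is kept.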

Now, we can define the nodes—the orchestrator node, the worker node, the synthesizer node, and the conditional node.

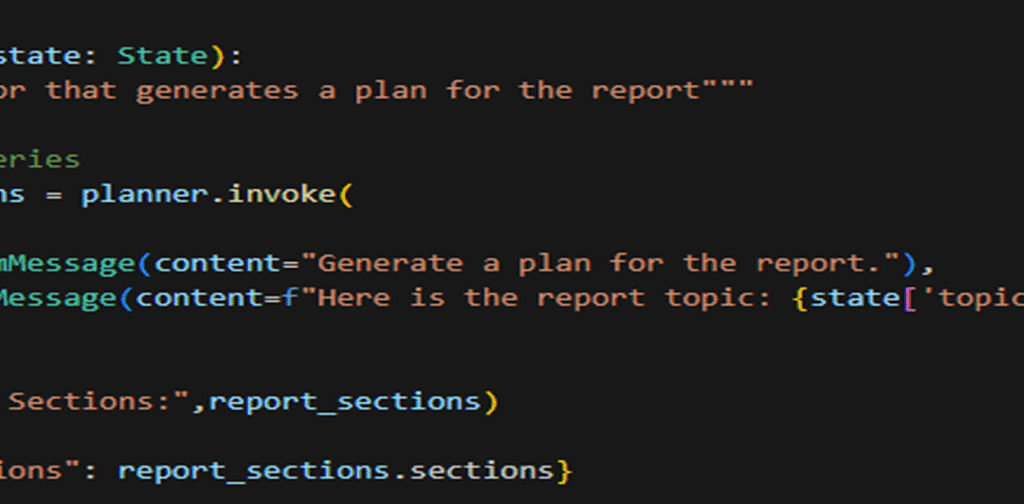

Orchestrator node: This node will be responsible for generating the sections of the blog.

Worker node: This node will be used by workers to generate content for the different sections.

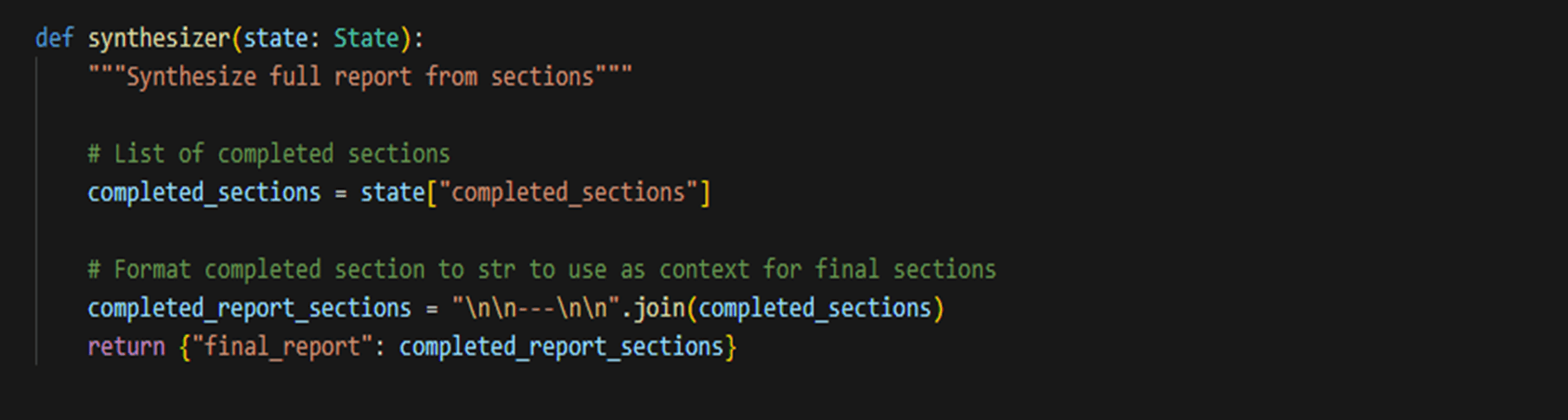

Synthesizer node: This node will take each worker’s output and combine it to generate the final output.

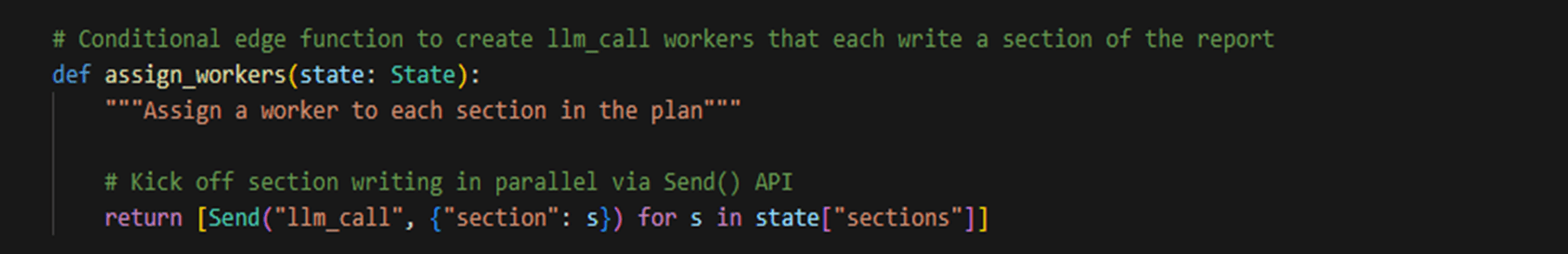
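The worker and synthesizer steps can be sketched as plain functions that take the state and return only the keys they update. This is a simplified illustration under assumptions: `fake_llm` is a hypothetical stand-in for the real model call, and the state keys and the separator string are chosen for the example.

```python
def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a chat-model call."""
    return f"[draft] {prompt}"


def worker(state: dict) -> dict:
    """Write one section and return only the key this node updates."""
    section = state["section"]
    text = fake_llm(f"{section['name']}: {section['description']}")
    # Return a one-item list so a list-concatenating reducer can merge it.
    return {"completed_sections": [text]}


def synthesizer(state: dict) -> dict:
    """Join all completed sections into the final report."""
    body = "\n\n---\n\n".join(state["completed_sections"])
    return {"final_report": body}
```

Each worker only sees its own section and contributes a single list item, while the synthesizer reads the merged list once all workers have finished.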

Conditional node to assign workers: This conditional node is responsible for assigning the sections of the blog to workers, one worker per section.

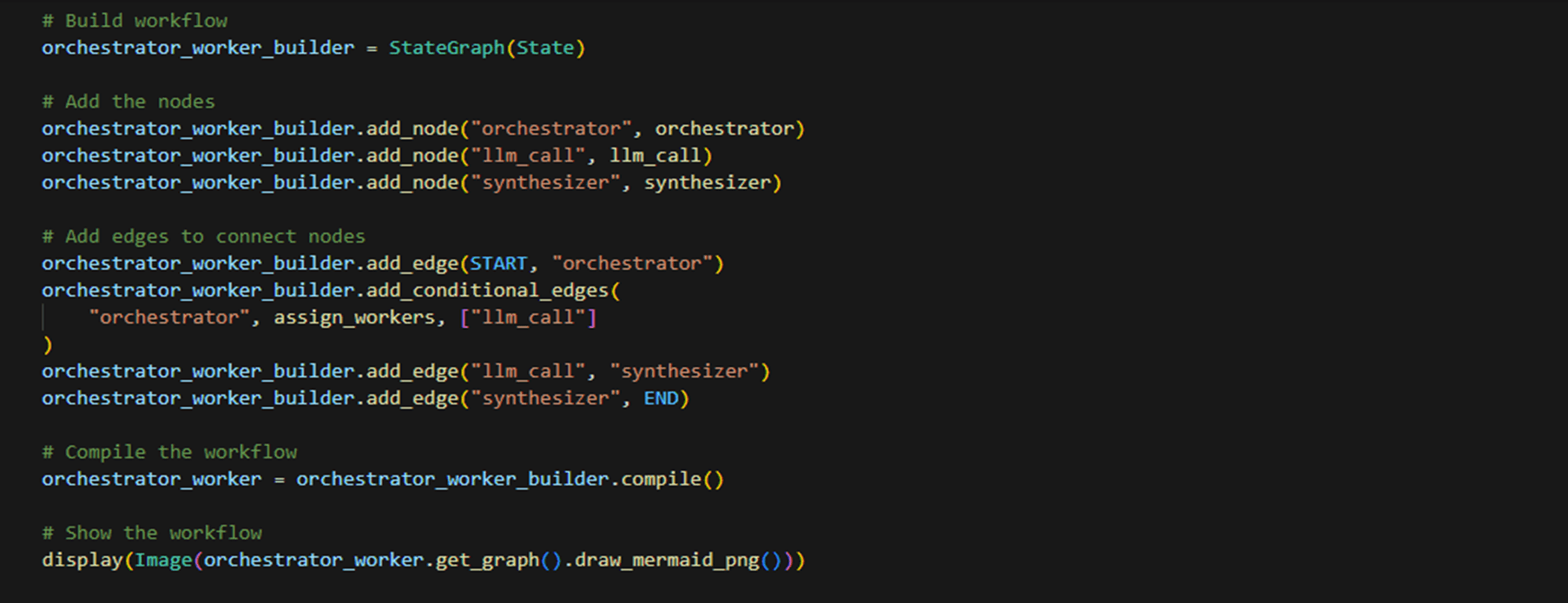
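The idea behind the assignment step can be sketched without LangGraph as follows. `Dispatch` is a hypothetical stand-in for a "send this payload to that node" message; LangGraph provides a `Send` primitive for this kind of fan-out, so check the current documentation for its import path and exact usage.

```python
from typing import NamedTuple


class Dispatch(NamedTuple):
    """Hypothetical stand-in for a 'send this payload to that node' message."""
    node: str
    payload: dict


def assign_workers(state: dict) -> list[Dispatch]:
    """Emit one dispatch per planned section, so worker count follows the plan."""
    return [Dispatch("worker", {"section": s}) for s in state["sections"]]
```

Because the list is built from `state["sections"]` at run time, a plan with two sections yields two dispatches and a plan with six yields six, with no change to the graph definition.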

Now, finally, let’s build the graph.

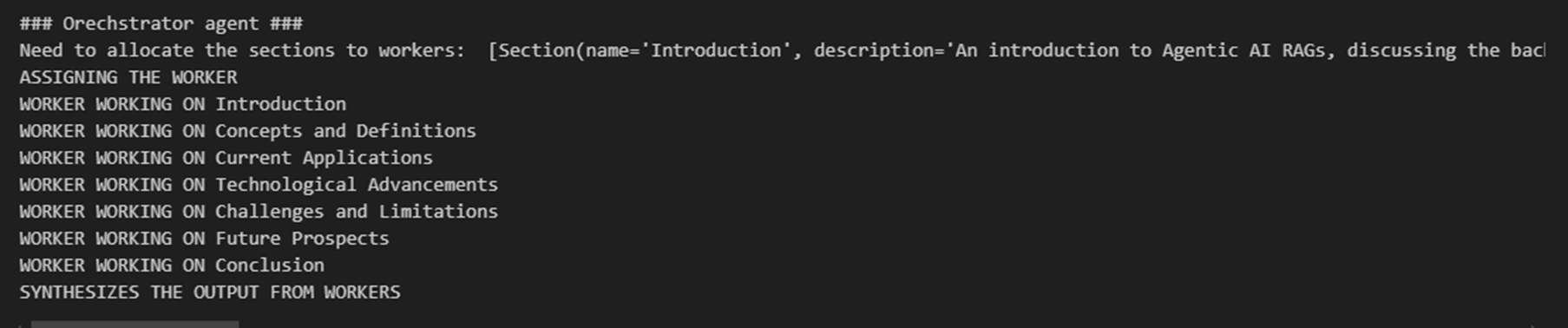

Now, when you invoke the graph with a topic, the orchestrator node breaks it down into sections, and the conditional node checks how many sections there are and dynamically assigns workers. For example, if there are two sections, two workers are created. Each worker node then generates content for its assigned section in parallel. Finally, the synthesizer node combines the outputs into a cohesive blog, ensuring an efficient and organized content creation process.

The Orchestrator-Workers workflow agent can also address other use cases. Some of them are listed below:

- Automated Test Case Generation – Streamlining unit testing by automatically generating code-based test cases.

- Code Quality Assurance – Ensuring consistent code standards by integrating automated test generation into CI/CD pipelines.

- Software Documentation – Generating UML and sequence diagrams for better project documentation and understanding.

- Legacy Code Refactoring – Assisting in modernizing and testing legacy applications by auto-generating test coverage.

- Accelerating Development Cycles – Reducing manual effort in writing tests, allowing developers to focus on feature development.

The Orchestrator-Workers workflow agent not only boosts efficiency and accuracy but also enhances code maintainability and collaboration across teams.

Closing Lines

To conclude, the Orchestrator-Worker Workflow Agent in LangGraph represents a forward-thinking and scalable approach to managing complex, unpredictable tasks. By utilizing a central orchestrator to analyze inputs and dynamically break them into subtasks, the system effectively assigns each task to specialized worker nodes that operate in parallel.

A synthesizer node then seamlessly integrates these outputs, ensuring a cohesive final result. Its use of state classes for managing shared variables and a conditional node for dynamically assigning workers ensures optimal scalability and adaptability.

This flexible architecture not only improves efficiency and accuracy but also adapts to varying workloads by allocating workers where they are needed most. In short, its versatile design paves the way for improved automation across diverse applications, ultimately fostering greater collaboration and accelerating development cycles.