Business analytics, or solving business problems through better use of data, is absolutely nothing new. But every decade or so new technology takes a big leap forward (client/server, web, etc.) and makes previous architectures obsolete. The next big wave of business analytics infrastructure is poised to start arriving this year.

Let’s take a look at an analogy from the history of computing. The first computer ever used for commercial business applications, the “Lyons Electronic Office” (LEO), was created in 1951. J. Lyons and Co. was the Starbucks of its day, with high-volume tea rooms open 24 hours a day in key locations across the United Kingdom.

Lyons used LEO to solve a classic business analytics problem that organizations still struggle with today. They created a “Bakery Valuation application” that let the company optimize profitability and minimize waste by calculating exactly how many perishable buns and tea-cakes should be produced for the next day, based on the latest purchase data available. The very first application on the very first commercial computer was already all about business analytics.

LEO was the Exadata of its era – it was the biggest and best data-crunching machine available, with more than double the memory of its nearest rival, the Colossus. Sixty-four 5ft-long mercury tubes, each weighing half a ton, were used to provide a massive 8.75 Kb of memory (i.e. one hundred-thousandth of the memory of today’s entry-level iPhone).

LEO provided breakthrough performance. It could calculate an employee’s pay in 1.5 seconds, a job that took skilled clerks 8 minutes. But LEO was already a dinosaur, about to be replaced by a completely new technology.

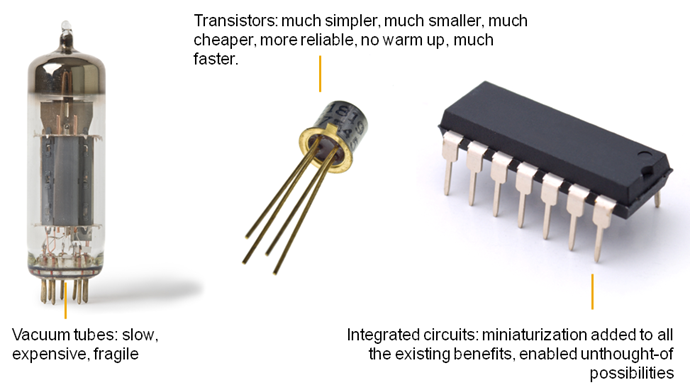

LEO used over 6,000 vacuum tubes to carry out calculations. They worked, but they were complex, large, slow, fragile, expensive, and generated massive amounts of waste heat and noise. Engineers could detect problems simply by listening to the cacophony of buzzes and clicks generated by the machine.

Then a technology breakthrough came along: the transistor. Invented in 1947, transistors were much simpler, much smaller, much cheaper, more reliable, and much, much faster than vacuum tubes. The first transistor-based computers appeared in 1953, radically changing what was possible with electronics and rapidly consigning LEO to the dustbin of history.

And transistors were just the start of the revolution. As technology improved and miniaturized, integrated circuits were created to pack millions of transistors onto a single chip, enabling previously unthinkable possibilities (try to imagine a vacuum-tube-powered iPad!).

I believe that we are rapidly moving from the “vacuum tube era” of BI and data warehousing to the “transistor era”. Today’s best-practice BI architectures are rapidly becoming obsolete, and we can already start imagining what the new “integrated circuit” opportunities of the future might look like.

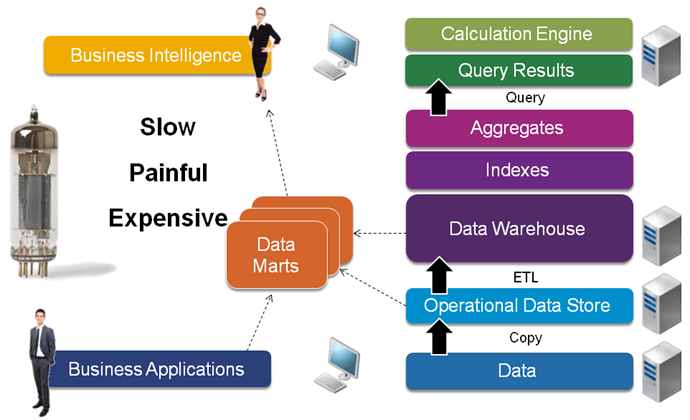

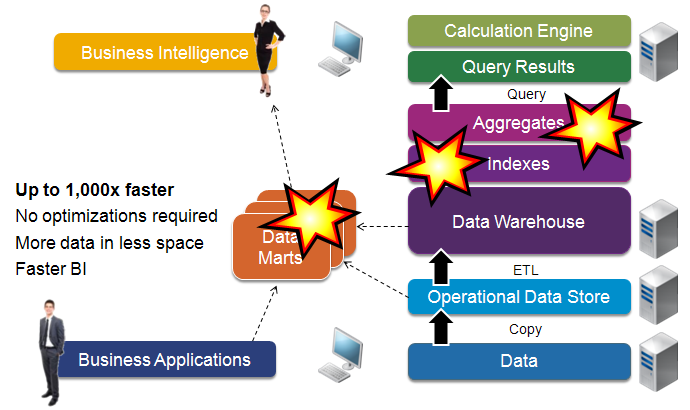

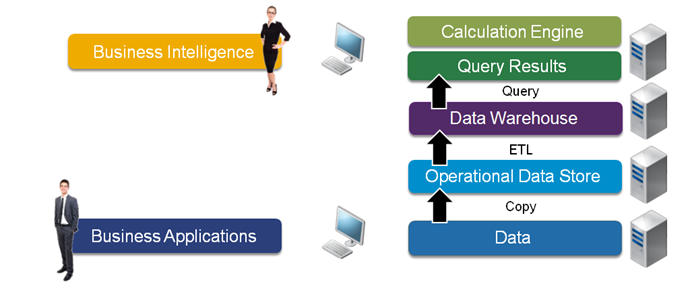

The last decade of “traditional best-practice” BI has been based on the following architecture:

- We start with business applications that gather the data we would like to analyze.

- We can’t do more than basic reporting against this data without slowing down the system, so we create a copy that’s typically called an “operational data store” or ODS.

- The ODS doesn’t store history, we want to analyze data from multiple systems, and the data is often incompatible or incomplete, so we use ETL (extraction, transformation, and loading) technology to load data into database structures optimized for business intelligence – a data mart or data warehouse.

- Businesses want to store lots of information. To provide acceptable query times, the data warehouse must be optimized by the addition of specialized data structures, database indexes, and aggregate tables – all of which add to the size and complexity of the data warehouse.

- Business intelligence tools are made available to business people to access and display the information they are interested in. To provide better interactivity, an additional data cache is often created for a particular report or cube.

- Because this architecture is slow and unwieldy, organizations often create extra data marts for a particular business need.

The result is a vacuum tube: it works, and it’s the best alternative we have right now, but it’s slow, complex, and expensive.

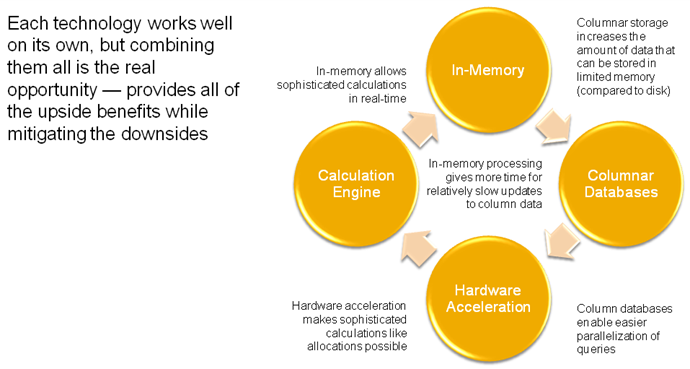

Faced with these frustrations, several technologies have been used over the years to increase business intelligence speed and flexibility. Each has provided valuable progress, but has some downside that prevented it being used on a more general basis. A tipping point has arrived, however, and a combination of these approaches holds out the promise of a radically simpler BI architecture.

The most important is “in-memory processing”. All computer processing has always happened in live memory, but up until now there have been severe limitations on how much data could be held there, so data first has to be retrieved from disk storage before it can be processed.

Over time, memory and processing capabilities have expanded exponentially, in line with Moore’s Law, doubling every few years. But disk access speeds have been limited by real-world aerodynamics, and have increased only by 13x or so over the last fifty years. The result has been an ever-widening gulf between the speed of processing data and retrieving it from disk. Today, it can be up to a million times slower to get data from disk than from live memory.

This leads to tough architecture choices. One way of imagining the consequences is to compare it to a chef cooking a meal. If — like on the TV cooking shows — all the ingredients are already prepared and sitting on the counter-top, it’s very quick and easy to create the meal. This is the equivalent of “in-memory processing” once all the required data is available.

But imagine now that the chef doesn’t already have the ingredients ready. Given the slow relative speed of disk access, it’s as if the closest supermarket were on the planet Mars, and the ingredients had to travel months by rocket before each and every meal.

Database vendors have taken every approach possible to increase disk access speeds, for example by predicting what data is most likely to be needed and caching it in advance (the equivalent of pre-stocking a larder in the restaurant, full of the ingredients that are most requested). But the whole point of a data warehouse is to be able to ask any question – the equivalent of being able to order any meal in the restaurant — and so you have to go back to the supermarket on Mars on a regular basis.
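To make the “larder” idea concrete, here is a minimal sketch of a least-recently-used cache sitting in front of a slow store. Everything in it (the `LruCache` class, `load_block`, the block numbers) is a hypothetical stand-in of mine, not how any particular database vendor implements caching. It simply shows why a cache helps a predictable workload and does very little for ad hoc questions.

```python
from collections import OrderedDict


class LruCache:
    """Tiny least-recently-used cache standing in for a database buffer cache."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key, load_from_disk):
        if key in self.items:
            self.items.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self.items[key]
        self.misses += 1  # the "trip to Mars"
        value = load_from_disk(key)
        self.items[key] = value
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # evict the least recently used entry
        return value


def load_block(block_id):
    # Hypothetical stand-in for a slow disk read.
    return f"data for block {block_id}"


def run_workload(block_ids, capacity=3):
    """Replay a sequence of block requests and return (hits, misses)."""
    cache = LruCache(capacity)
    for block_id in block_ids:
        cache.get(block_id, load_block)
    return cache.hits, cache.misses


# A predictable report touches the same few blocks again and again.
print(run_workload([1, 2, 3] * 4))    # (9, 3)
# Ad hoc analysis touches a different block every time.
print(run_workload(list(range(12))))  # (0, 12)
```

The second workload never benefits from the cache at all, which is the restaurant that lets you order any meal.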

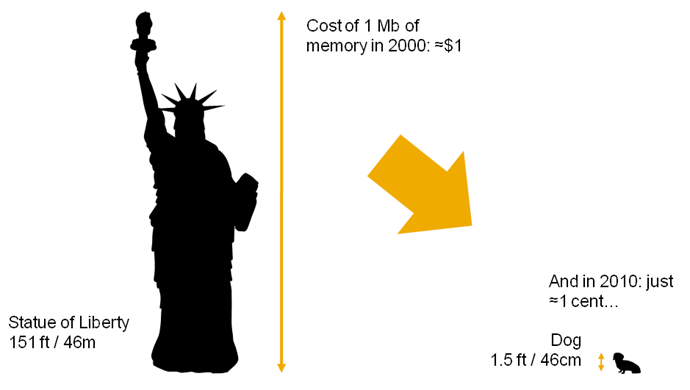

Up until recently, it’s simply been too expensive to store data anywhere other than disk. But the price of memory has plummeted over the last two decades, and 64-bit addressing has radically increased how much of it can easily be accessed. Just ten years ago (when we first defined the current BI best practices) the price of one megabyte of live memory was around one dollar. Now it’s over a hundred times less: below one cent, and still falling fast. This is equivalent to something shrinking from the size of the Statue of Liberty down to a Chihuahua: it would be strange indeed if this didn’t have an impact on how we create our BI architectures.

If the whole data warehouse could be stored in-memory, we could make the whole system much faster and much simpler – and eliminate the need for disk-based storage altogether. We’d no longer have need for database optimizations like aggregates and indexes – and eliminating these simplifies the data loading process, and allows us to store more data in that limited, valuable memory space.

But in-memory processing alone only gets you so far – to get the full value of in-memory processing, we want to pack in as much data as possible, and to do that, we can turn to a complementary technology: column data stores.

Today’s relational databases are row-based: each new set of data is written into the next-available memory space. This is fast, which is essential for high-volume transactional applications writing to slow disks. But there are downsides for storing analytic data in a row-based structure, in terms of storage efficiency and query speed.

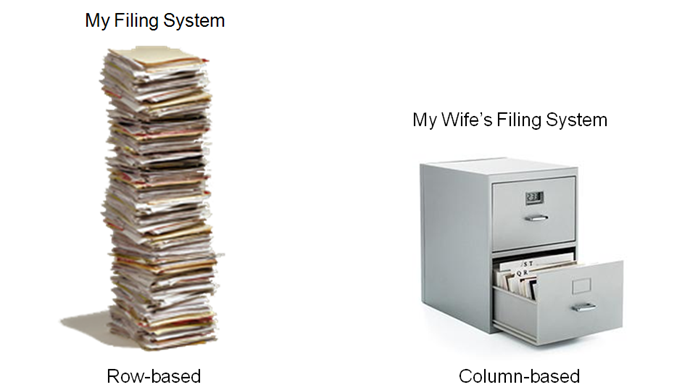

Let’s use an analogy to illustrate the difference between the systems: I employ a “row-based” filing system at home. I open each day’s mail, take a quick look, and then put it on top of a big pile in the corner of my bedroom. At one level, it’s an extremely efficient system: it’s very fast to “write” to the database, and if I want to find all the papers I received on a particular date (a “transaction”), I can find it pretty quickly.

But if I want to do some “analysis”, such as finding my last five bank statements, it’s slow and painful: I have to systematically go through the whole pile (a “full table scan”). I could make things faster by, say, adding yellow post-it notes to the corners of bank statements, so I can go straight to that type of document (a “database index”), but that would create extra work and complicate the system.

My (far more organized) wife uses a “column-based” filing system. When she receives her mail, she takes the time to sort out the documents and allocate them to separate folders. It’s initially slower to store information, but it’s much, much faster when she wants to find all her bank statements.
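For readers who prefer code to filing cabinets, here is a rough sketch of the two layouts using plain Python lists. The records and field names are invented for illustration, and a real database engine is of course far more sophisticated. The point is only that a row layout keeps each record together, while a column layout keeps each attribute together.

```python
# Hypothetical mail records: (date received, document type, amount)
rows = [
    ("2011-01-03", "bank statement", 1200.0),
    ("2011-01-04", "utility bill", 80.0),
    ("2011-02-03", "bank statement", 1150.0),
    ("2011-02-10", "pay slip", 3000.0),
    ("2011-03-03", "bank statement", 1300.0),
]

# Row layout: each record is kept together, in arrival order (the pile).
row_store = list(rows)

# Column layout: one list per attribute (the folders).
column_store = {
    "date": [r[0] for r in rows],
    "doc_type": [r[1] for r in rows],
    "amount": [r[2] for r in rows],
}


def total_amount_row_store(store):
    # Every full record is touched, even though only one field is needed.
    return sum(record[2] for record in store)


def total_amount_column_store(store):
    # Only the "amount" column is read.
    return sum(store["amount"])


def fetch_record_column_store(store, position):
    # Rebuilding one full record means visiting every column.
    return tuple(store[name][position] for name in ("date", "doc_type", "amount"))


print(total_amount_row_store(row_store))           # 6730.0
print(total_amount_column_store(column_store))     # 6730.0
print(fetch_record_column_store(column_store, 3))  # ('2011-02-10', 'pay slip', 3000.0)
```

Both layouts give the same answers. What differs is how much data has to be touched: the analytic question reads one column, while retrieving a single “transaction” from the column layout has to visit all of them.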

Column databases store data more efficiently, and allow greater compression, because you store similar types of information together. For example, I get paid the same amount each month, so rather than storing the same pay slip twelve times in the file, I could simply store it once, with a link to each month, and add an exception for my annual bonus. The result is that you can store between ten and a hundred times more data in the same physical space, shrinking the data warehouse back down to a size similar to the raw data used to create it (see diagram below). This in turn reduces the amount of memory you need to scan for each query and increases query processing speed.
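The pay slip example can be sketched with run-length encoding, one common way of compressing a column of repeated values. I am assuming this technique purely for illustration; real column stores combine several encodings, and the figures below are made up.

```python
def run_length_encode(values):
    """Collapse consecutive repeats into [value, count] pairs."""
    runs = []
    for value in values:
        if runs and runs[-1][0] == value:
            runs[-1][1] += 1
        else:
            runs.append([value, 1])
    return runs


def run_length_decode(runs):
    """Expand [value, count] pairs back into the original column."""
    decoded = []
    for value, count in runs:
        decoded.extend([value] * count)
    return decoded


# Hypothetical salary column: the same pay for eleven months, then a bonus month.
monthly_pay = [3000] * 11 + [5000]
encoded = run_length_encode(monthly_pay)

print(encoded)                                    # [[3000, 11], [5000, 1]]
print(run_length_decode(encoded) == monthly_pay)  # True
```

Twelve stored values shrink to two pairs. The trick works because a column holds values of one kind next to each other, whereas the same values scattered across rows would not form long runs.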

Commercial column databases such as Sybase IQ have been around for a long time, and have proved their value, particularly in very high-volume data warehousing environments. But the extra up-front loading time, compared with the slow write-time to disks, has limited their use in the market.

Now let’s imagine combining the two technologies, with an in-memory, column database. Because it’s compact, we can now store the entire data warehouse in memory. And because it’s fast, loading times are no longer a problem. We now have the best of both worlds without the downsides of each: the equivalent of being able to store the whole supermarket in the chef’s kitchen.

But we haven’t finished yet. We can bring three other data warehouse optimization techniques into the mix: analytic appliances, in-database algorithms, and incremental loading.

By building a massively-parallel machine specifically for data warehousing, we can again radically increase the speed we can access and manipulate data. In particular, column databases are very well adapted to parallelization: because each column is stored in a separate area of memory, aggregates can be efficiently handed off to a separate processor, or even partitioned across several processors.

To go back to the filing analogy: it’s the equivalent of my wife and I both working on our finances. If we had to work off the same pile of “row-based” documents, we’d constantly be in each other’s way. But with separate “column-based” folders, my wife can look through the documents of one bank while I look through another, or we can split the folder in two and each look at one half of them (“partitioning”).
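Splitting the folder in two looks roughly like this in code. It is a sketch under my own assumptions: the function names and data are hypothetical, and I use Python threads only to show the shape of the work (partition, aggregate each part, combine). In standard Python, threads will not actually speed up this kind of arithmetic, whereas a real appliance spreads the partitions across separate processors.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(column, parts):
    """Cut one column into roughly equal contiguous slices."""
    size = max(1, -(-len(column) // parts))  # ceiling division, at least 1
    return [column[i:i + size] for i in range(0, len(column), size)]


def parallel_sum(column, workers=4):
    """Sum each partition in its own worker, then combine the partial sums."""
    partitions = split_into_partitions(column, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum, partitions))
    return sum(partial_sums)


revenue_column = list(range(1, 1001))  # hypothetical revenue values
print(parallel_sum(revenue_column))    # 500500
```

Because the revenue column lives in its own contiguous area, each worker gets a clean slice and nobody is in anybody else’s way.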

We can also radically improve query speed by doing as much work as possible directly in the database. Today, if you want to do some statistical analysis, you typically have to do a query on the data warehouse, extract a large set of data to a separate statistics tool, create a predictive model, and then apply that to your data.

To take an overly simplistic example: imagine you wanted to rank one million customers by their cumulative revenue. If ranking is available directly in the database engine, you only have to extract one line of data – the result – rather than a million rows.
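Here is a small sketch of that idea using SQLite’s in-memory mode from Python. The `sales` table, its columns, and the data are all hypothetical, and SQLite is just a convenient stand-in for an analytic engine. The database does the summing and sorting, and only the handful of rows we asked for ever leave it.

```python
import sqlite3


def top_customers(connection, limit):
    """Let the database aggregate and sort; fetch only the top rows."""
    query = """
        SELECT customer_id, SUM(revenue) AS total_revenue
        FROM sales
        GROUP BY customer_id
        ORDER BY total_revenue DESC, customer_id
        LIMIT ?
    """
    return connection.execute(query, (limit,)).fetchall()


connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE sales (customer_id INTEGER, revenue REAL)")
# Hypothetical data: 1,000 customers with two purchases each.
connection.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [(cid, float(cid)) for cid in range(1, 1001)]
    + [(cid, 10.0) for cid in range(1, 1001)],
)

best = top_customers(connection, 3)
print(best)  # [(1000, 1010.0), (999, 1009.0), (998, 1008.0)]
```

The alternative would be to pull all 2,000 sales rows into the client and rank them there, which is exactly the extract-then-analyze pattern described above.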

Of course, by having all the required data in-memory, and with the support of massively parallel processing, we can imagine far more sophisticated operations than just ranking. For example, accurate profitability and costing models can require huge amounts of processing. Being able to do it directly in the in-memory database would take a fraction of the time it typically does today.

Using a separate appliance also allows us to simplify our architecture: we can add a BI server in the same machine, without the need for a separate local calculation engine.

Next, instead of finding and updating data values in our data warehouse, we’ll just incrementally replicate new data from the operational system as soon as it’s available, and add a time-stamp. For each query, we simply ignore any out-of-date data. The loading process becomes much simpler and faster: we can just add new data as it arrives without having to look at what’s already in the data warehouse. This also provides a big auditing and compliance advantage: we can easily recreate the state of the data warehouse at any point in time.
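A minimal sketch of this append-only approach, with names and data I have invented for illustration: every load just appends a time-stamped version, and each query picks the latest version it is allowed to see. Integer timestamps stand in for real load times.

```python
class AppendOnlyStore:
    """Append-only table of (key, value, loaded_at) versions."""

    def __init__(self):
        self.versions = []

    def load(self, key, value, loaded_at):
        # New data is only ever appended; nothing is looked up or overwritten.
        self.versions.append((key, value, loaded_at))

    def as_of(self, timestamp):
        """Return the latest value per key, ignoring anything loaded later."""
        latest = {}
        for key, value, loaded_at in self.versions:
            if loaded_at > timestamp:
                continue
            if key not in latest or loaded_at >= latest[key][1]:
                latest[key] = (value, loaded_at)
        return {key: value for key, (value, _) in latest.items()}


store = AppendOnlyStore()
store.load("customer-42/credit_limit", 1000, loaded_at=1)
store.load("customer-7/credit_limit", 500, loaded_at=2)
store.load("customer-42/credit_limit", 2500, loaded_at=3)

current = store.as_of(3)  # customer-42 shows the updated limit, 2500
earlier = store.as_of(2)  # customer-42 still shows the original limit, 1000
```

The `as_of` call with an earlier timestamp is the auditing advantage in miniature: the old value was never overwritten, so the earlier state can be rebuilt on demand.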

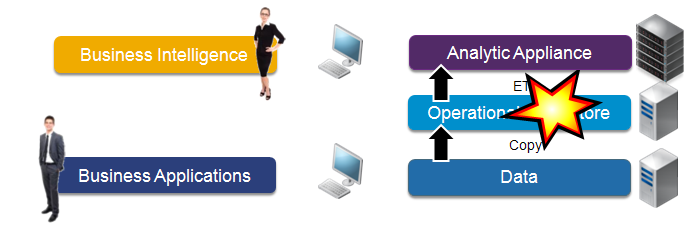

Since we’re only loading new data, and we can do so in real-time, we no longer need an operational data store, and can eliminate it from our architecture.

It’s worth noting at this point the virtuous circle created by these different technology advances working together. Different vendors in the industry typically concentrate on combining one or two approaches. Each provides an improvement, but combining them all is the real opportunity: together, they provide all the upside benefits while mitigating the downsides of each technology – and lead to the real tipping point, where you can realistically give up the disk-based storage.

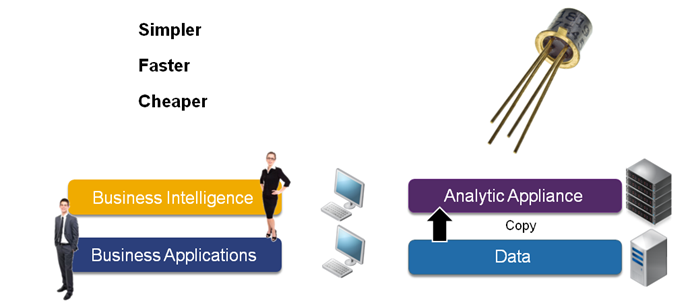

Thanks to in-memory processing, column databases, hardware optimization, in-database calculations and incremental loading, we’re left with the “transistor” version of business analytics. It does the same thing as the previous architecture, but it’s radically simpler, faster, and more powerful.

This diagram represents the “new best practice” possibilities of the new generation of analytic platforms. But we can go even further, and start thinking about the “integrated circuit” phase of analytics – what new things might be possible in the future?

If we add ACID compliance (a set of properties required for reliable processing of operational data), and the option of using row and/or column storage in the same database, then we could use the same platform for both transactional and analytic processing. Backup storage would be provided by solid-state devices, so you could always recreate your in-memory environment.

This would have several advantages. First, we’ve talked a lot about having a “single source of the truth” in the last decades of business intelligence – if the transactions and the analytics are happening off the same set of data, then we can get a lot closer to our goal.

Second, we get closer to “actionable intelligence”, the ability to provide people with analytic information as a seamless part of their operational activities, in time to make a difference (e.g. predict that the store will be out of stock tomorrow, and arrange a new delivery, rather than just telling us that the store ran out of stock yesterday).

Third, the new architecture is surprisingly well adapted to real-world business applications, which typically require much more than simple read-and-write operations. Application designers would no longer have to create complex logic above the database layer to do things like budget allocations or supply-chain optimization – these could use the superior analytic power of the analytic engine directly within the new platform.

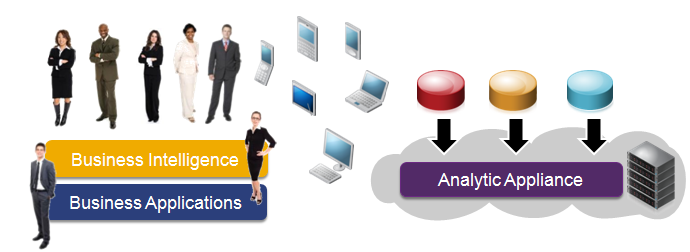

We could then extend this architecture – putting it in the cloud would make mobile business intelligence, extranets, and data collaboration (both inside the organization and across the “business web”) easier and simpler.

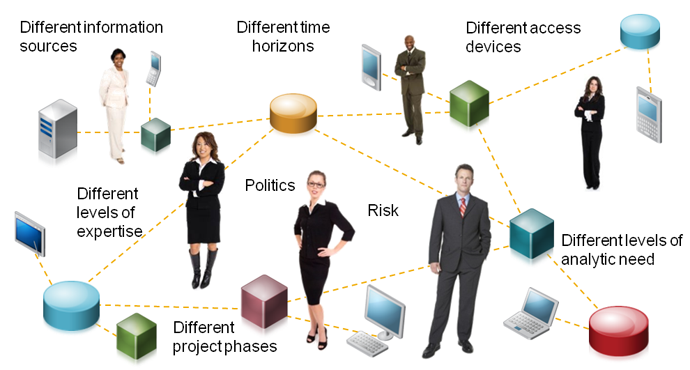

There are also enterprise information management advantages. For example, one common business frustration with BI has been how hard it is to compare corporate data stored in the data warehouse with personal or external data. Today, business people have to extract a large download from the data warehouse, and manually combine it with other data using Excel. This adds extra complexity and work, and leads to information governance issues.

With the new architecture, there’s no longer any need for painful staged uploads to the data warehouse – we can create a “sandbox” area in the analytic platform, let people upload their data in simple row format, and combine and analyze as they wish, using the same, standard, secure corporate infrastructure.

It also turns out that column databases do a good job of storing text data for easy search and retrieval, and other forms of data and algorithms (XML, Hadoop) could potentially use the same infrastructure.

There’s one key thing to note at this point: the diagram seems to imply that “data warehousing” is no longer necessary. But nothing could be further from the truth. Reality is, and always will be, messy. The core need to transform, integrate, and model data, across a wide variety of different sources, is as important as ever.

Data integration, metadata, and data quality issues are business problems that don’t magically disappear with a new technical infrastructure. But we can use the power of the in-memory calculations to do enterprise information management in real-time, rather than batch. It becomes more practical to integrate data on the fly (“virtual data warehousing”), and we can take the data quality algorithms we use today (fuzzy matching, comparisons to master data, etc.), and execute them as transactions happen. We can store both the raw data, and the “validated” or “corrected” values. This allows us to flexibly decide, at query time, how much reliability we need for a particular analytic need.
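As a rough illustration of checking data quality as each transaction arrives, the sketch below fuzzy-matches an incoming city name against a small master list with Python’s standard `difflib` module, and keeps both the raw and the validated value. The master list, the function names, and the 0.8 similarity cutoff are my own assumptions, not a recommendation for production matching rules.

```python
from difflib import get_close_matches

# Hypothetical master data.
MASTER_CITIES = ["London", "Manchester", "Birmingham", "Edinburgh"]


def validate_city(raw_value, cutoff=0.8):
    """Return a record holding both the raw and the validated value."""
    candidate = raw_value.strip().title()
    matches = get_close_matches(candidate, MASTER_CITIES, n=1, cutoff=cutoff)
    return {"raw": raw_value, "validated": matches[0] if matches else None}


def city_for_query(record, require_validated):
    """Pick the value a query should use, depending on how strict it needs to be."""
    if require_validated:
        return record["validated"]
    return record["validated"] or record["raw"]


typo = validate_city(" londn ")
unknown = validate_city("Atlantis")

print(typo)     # {'raw': ' londn ', 'validated': 'London'}
print(unknown)  # {'raw': 'Atlantis', 'validated': None}
print(city_for_query(unknown, require_validated=False))  # Atlantis
```

A strict financial report could ask for validated values only, while an exploratory query could fall back to the raw value rather than lose the row.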

In conclusion

The last decade of BI best-practice data warehouse architectures is rapidly becoming obsolete, as a combination of existing technologies comes together to provide a tipping point. Every vendor in the market includes some combination of in-memory processing, column databases, appliances, in-database calculations, map-reduce-type algorithms, and combined operations and analytics in their vision. The obvious conclusion is that the real vision is to use all of these technologies at the same time.

The new analytic platforms won’t magically cure everything that plagues today’s BI projects, but will lead to two big changes:

- They give us more simplicity and flexibility, so we can implement business analytics fast, without having to spend most of our time tuning the infrastructure for performance.

- They give us the power of big data and real real-time performance, combining the best of operations and analytics to create new applications that simply aren’t feasible today.

Other resources:

- Gartner: “Data Warehousing Reaching Its Most Significant Inflection Point Since Its Inception”

- IDC: “What do a cluster of corporate events tell us about the specialty data warehousing market?”

- Merv Adrian and Colin White: “Analytic Platforms: Beyond the Traditional Data Warehouse”

- Hasso Plattner: “A common database approach for OLTP and OLAP using an in-memory database” and book: “In-Memory Data Management: An Inflection Point for Enterprise Applications”