This article completes the three-part series which started with Using historical data to justify BI investments – Part I and continued (somewhat inevitably) with Using historical data to justify BI investments – Part II. Having presented a worked example, which focused on using historical data both to develop a profit-enhancing rule and then to test its efficacy, this final section considers the implications for justifying Business Intelligence / Data Warehouse programmes and touches on some more general issues.

The Business Intelligence angle

In my experience, when I talk to people about the example I have just shared, there can be an initial “so what?” reaction. It may seem that we have simply adopted the all-too-frequently-employed business ruse of accentuating the good and down-playing the bad. Who has not heard colleagues say “this was a great month excluding the impact of X, Y and Z”? Of course the implication is that, once you include X, Y and Z, it would probably be a much less great month; but this is not what we have done.

One goal of business intelligence is to help in estimating what is likely to happen in the future and guiding users in taking decisions today that will influence this. What we have really done in the above example is as follows:

![Look out Morlocks, here I come... [alumni of Imperial College London are so creative aren't they?]](http://peterthomas.files.wordpress.com/2011/05/the-time-machine.jpg?w=400&h=272)

- shift “now” back two years in time

- pretend we know nothing about what has happened in these most recent two years

- develop a predictive rule based solely on the three years preceding our back-shifted “now”

- then use the most recent two years (the ones we have metaphorically been covering with our hand) to see whether our proposed rule would have been efficacious

For the avoidance of doubt, in the worked example, the losses incurred in 2009 – 2010 have absolutely no influence on the rule we adopt; it is based solely on 2006 – 2008 losses. The 2009 – 2010 losses are used only to validate our rule.
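The back-shifting of “now” described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the six policies and their figures are invented here (they are not the data from Part II), and the names `Policy`, `keep_policy` and the 70% cut-off are hypothetical choices made for this sketch rather than the rule from the worked example. The point is structural: the decision function reads only the 2006 – 2008 fields, and the 2009 – 2010 fields are touched only when checking the outcome.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    policy_id: str
    premium_dev: float  # premium earned 2006-2008 (window used to build the rule)
    losses_dev: float   # losses incurred 2006-2008
    premium_val: float  # premium earned 2009-2010 (window "covered with our hand")
    losses_val: float   # losses incurred 2009-2010


# Invented figures (in £k), purely for illustration; NOT the Part II data.
BOOK = [
    Policy("A", 300, 120, 200, 70),
    Policy("B", 300, 270, 200, 160),
    Policy("C", 300, 150, 200, 110),
    Policy("D", 300, 90, 200, 60),
    Policy("E", 300, 330, 200, 90),   # counter-example: bad business turning good
    Policy("F", 300, 60, 200, 150),   # counter-example: good business turning bad
]

MAX_DEV_LOSS_RATIO = 0.70  # hypothetical cut-off, chosen for this sketch only


def keep_policy(policy, threshold=MAX_DEV_LOSS_RATIO):
    """Decide using ONLY the 2006-2008 window; 2009-2010 is never consulted."""
    return policy.losses_dev / policy.premium_dev <= threshold


def validation_loss_ratio(policies):
    """Loss ratio over 2009-2010, used solely to check the rule afterwards."""
    total_losses = sum(p.losses_val for p in policies)
    total_premium = sum(p.premium_val for p in policies)
    return total_losses / total_premium


kept = [p for p in BOOK if keep_policy(p)]
whole_book_ratio = validation_loss_ratio(BOOK)
kept_ratio = validation_loss_ratio(kept)

print(f"2009-2010 loss ratio, whole book:   {whole_book_ratio:.1%}")
print(f"2009-2010 loss ratio, rule applied: {kept_ratio:.1%}")
```

Keeping the two windows in separate fields, and letting `keep_policy` see only one of them, is what stops the validation years leaking into the rule.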

We have therefore achieved two things:

- Established that better decisions could have been taken historically at the juncture of 2008 and 2009

- Devised a rule that would have been more effective and displayed at least some indication that this could work going forward in 2011 and beyond

From a Business Intelligence / Data Warehousing perspective, the general pitch is then something like:

- If we can mechanically take such decisions, based on a very unsophisticated analysis of data, then making even simple information available to the humans taking decisions (i.e. basic BI) should surely improve the quality of their decision-making

- If we go beyond this to provide more sophisticated analyses (e.g. including industry segmentation, analysis of insured attributes, specific products sold etc., i.e. regular BI) then we can – by extrapolation from the example – better shape the evolution of the performance of whole books of business

- We can also monitor the decisions taken to determine the relative effectiveness of individuals and teams and compare these to their peers – ideally these comparisons would also be made available to the individuals and teams themselves, allowing them to assess their relative performance (again regular BI)

- Finally, we can also use more sophisticated approaches, such as statistical modelling to tease out trends and artefacts that would not be easily apparent when using a standard numeric or graphical approach (i.e. sophisticated BI, though others might use the terms “data mining”, “pattern recognition” or the now ubiquitous marketing term “analytics”)

The example also says something else – although we may already have reporting tools, analysis capabilities and even people dabbling in statistical modelling, it appears that there is room for improvement in our approach. The 2009 – 2010 loss ratio was 54% and it could have been closer to 40%. Thus what we are doing now is demonstrably not as good as it could be and the monetary value of making a step change in information capabilities can be estimated.

In the example, we are talking about £1m of biannual premium and £88k of increased profit. What would be the impact of better information on an annual book of £1bn premium? Assuming a linear relationship and using some advanced Mathematics, we might suggest £44m. What is more, these gains would not be one-off, but repeatable every year. Even if we moderate our projected payback to a more conservative figure, our exercise implies that we would not be out of line to suggest, say, an ongoing annual payback of £10m. These are numbers and concepts which are likely to resonate with Executive decision-makers.

To put it even more directly, an increase of £10m a year in profits would quickly dwarf the cost of a BI/DW programme. These are payback ratios that most IT managers can only dream of.
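For anyone who wants to check the “advanced Mathematics”, the linear scaling can be written out as below. This is a sketch under an assumption that I should label clearly: it reads the example as £1m of premium per year, with the £88k of extra profit earned across the two validation years, because that is the reading that reproduces the £44m figure. The function name `scale_annual_uplift` is hypothetical, and the linearity itself is of course an assumption rather than a finding.

```python
def scale_annual_uplift(uplift_over_two_years, example_annual_premium,
                        target_annual_premium):
    """Scale a two-year profit uplift linearly to a larger annual book.

    Assumes (1) the uplift accrues evenly over the two years and
    (2) uplift is strictly proportional to premium. Both are simplifications.
    """
    annual_uplift = uplift_over_two_years / 2
    return annual_uplift * target_annual_premium / example_annual_premium


# Figures quoted in the text, in pounds.
projected = scale_annual_uplift(
    uplift_over_two_years=88_000,
    example_annual_premium=1_000_000,      # ASSUMPTION: £1m is per year
    target_annual_premium=1_000_000_000,
)

conservative = 10_000_000                   # the moderated figure from the text
haircut = 1 - conservative / projected      # share of the projection discarded

print(f"Linear projection:    £{projected:,.0f} a year")
print(f"Conservative figure:  £{conservative:,.0f} a year")
print(f"Implied haircut:      {haircut:.0%}")
```

Seen this way, the £10m figure throws away roughly three-quarters of the naive projection, which is a reasonable margin for all the ways in which the real world is not linear.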

As an aside, it may have occurred to readers that the mechanistic rule is actually rather good and – if so – why exactly do we need the underwriters? Leaving aside examples of solely rule-based decision-making going somewhat awry (LTCM anyone?), the human angle is often necessary in messy things like business acquisition and maintaining relationships. Maybe because of this, very few insurance organisations rely on rules to take all decisions. However, it is increasingly common for rules to play some role in their overall approach. This is likely to take the form of triage of some sort. For example:

In this way process efficiencies are gained. Staff time is only applied where it is necessary and the most expensive resources are applied to those cases that most merit their abilities.

Correlation

Let’s pause for a moment and consider the Insurance example a little more closely. What has actually happened? Well we seem to have established that performance of policies in 2006 – 2008 is at least a reasonable predictor of performance of the same policies in 2009 – 2010. Taking the mutual fund vendors’ constant reminder that past performance does not indicate future performance to one side, what does this actually mean?

What we have done is to establish a loose correlation between 2006 – 2008 and 2009 – 2010 loss ratios. But I also mentioned a while back that I had fabricated the figures, so how does that work? In the same section, I also said that the figures contained an intentional bias. I didn’t adjust my figures to make the year-on-year comparison work out. However, at the policy level, I was guilty of making the numbers look like the type of results that I have seen with real policies (albeit of a specific type). Hopefully I was reasonably realistic about this. If every policy that was bad in 2006 – 2008 continued in exactly the same vein in 2009 – 2010 (and vice versa) then the loss ratio of my good segment would have dropped from the overall 54% to considerably less than 40%. The actual distribution of losses is representative of real Insurance portfolios that I have analysed. It is worth noting that only a small bias towards policies that start bad continuing to be bad is enough for our rule to work and profits to be improved. Close scrutiny of the list of policies will reveal that I intentionally introduced several counter-examples to our rule; good business going bad and vice versa. This is just as it would be in a real book of business.

Rather than continuing to justify my methodology, I’ll make two statements:

- I have carried out the above sort of analysis on multiple books of Insurance business and come up with comparable results; sometimes the implied benefit is greater, sometimes it is less, but it has been there without exception (of course statistics being what it is, if I did the analysis frequently enough I would find just such an exception!).

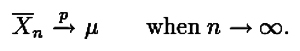

- More mathematically speaking, the actual figure for the correlation between the two sets of years is a less than stellar 0.44. Of course a figure of 1 (or indeed -1) would imply total correlation, and one of 0 would imply a complete lack of correlation, so I am not working with doctored figures. Even a very mild correlation in data sets (one much less than the threshold for establishing statistical dependence) can still yield a significant impact on profit.
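To make the point about mild correlation concrete, here is a minimal sketch of how such a figure can be computed and what it implies for a simple selection rule. The loss ratios below are invented for illustration (they are not the Part II figures and will not give 0.44), the 70% cut-off is a hypothetical choice, and the hand-rolled `pearson` function is simply the standard Pearson coefficient, written out to avoid depending on any particular library version.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    return covariance / sqrt(spread_x * spread_y)


# Invented per-policy loss ratios, equal premium assumed for every policy.
ratios_2006_2008 = [0.40, 0.90, 0.50, 0.30, 1.10, 0.20]
ratios_2009_2010 = [0.35, 0.80, 0.55, 0.30, 0.45, 0.75]

r = pearson(ratios_2006_2008, ratios_2009_2010)

# Hypothetical rule: keep policies whose earlier loss ratio was at most 70%.
CUT_OFF = 0.70
kept_later_ratios = [
    later
    for earlier, later in zip(ratios_2006_2008, ratios_2009_2010)
    if earlier <= CUT_OFF
]

overall_mean = sum(ratios_2009_2010) / len(ratios_2009_2010)
kept_mean = sum(kept_later_ratios) / len(kept_later_ratios)

print(f"Correlation between the two periods: {r:.2f}")
print(f"Later loss ratio, all policies:      {overall_mean:.1%}")
print(f"Later loss ratio, kept policies:     {kept_mean:.1%}")
```

Even with two deliberate counter-examples in the toy data, and a correlation well short of anything impressive, the kept segment still performs better than the book as a whole.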

Closing thoughts

Having gone into a lot of detail over the course of these three articles, I wanted to step back and assess what we have covered. Although the worked example was drawn from my experience in Insurance, there are some general lessons to be drawn.

Broadly I hope that I have shown that – at least in Insurance, but I would argue with wider applicability – it is possible to use the past to infer what actions we should take in the future. By a slight tweak of timeframes, we can even take some steps to validate approaches suggested by our information. It is important that we remember that the type of basic analysis I have carried out is not guaranteed to work. The same can be said of the most advanced statistical models; both will give you some indication of what may happen and how likely this is to occur, but neither of them is foolproof. However, either of these approaches has more chance of being valuable than, for example, solely applying instinct, or making decisions at random.

In Patterns, patterns everywhere, I wrote about the dangers associated with making predictions about events that are essentially unpredictable. This is another caveat to be borne in mind. However, to balance this it is worth reiterating that even partial correlation can lead to establishing rules (or more sophisticated models) that can have a very positive impact.

While any approach based on analysis or statistics will have challenges and need careful treatment, I hope that my example shows that the option of doing nothing, of continuing to do things how they have been done before, is often fraught with even more problems. In the case of Insurance at least – and I suspect in many other industries – the risks associated with using historical data to make predictions about the future are, in my opinion, outweighed by the risks of not doing this; on average of course!

