How much data gets processed every day? How much data do we generate every year?

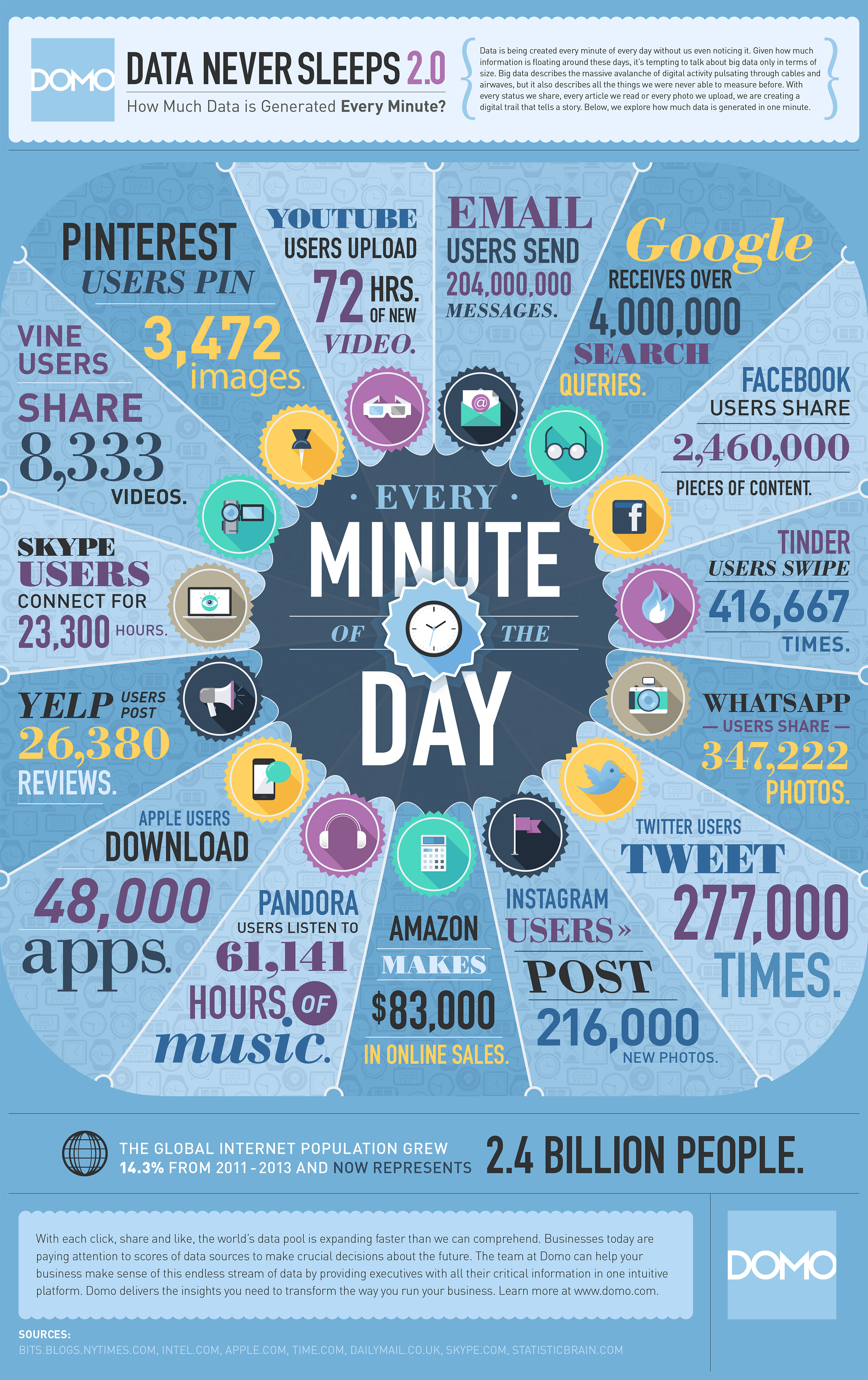

That number changes so fast, doubling every year or two, that we can only go with best estimates from informed sources. These sources, most of which are leading companies in the field of data management, estimate a figure so high it’s almost impossible to conceive of what it means. IDC, the global market intelligence firm, estimated that in 2011 we created 1.8 zettabytes (1.8 trillion gigabytes) of information. In 2012, they go on to say, we created 2.8 zettabytes. Further, they say that by 2020 we will generate 40 zettabytes a year.

IBM estimates that we create 2.5 quintillion (2.5 × 10^18) bytes of data each day.
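To make these magnitudes easier to compare, the arithmetic behind the estimates above can be sketched in a few lines of Python. This is only an illustration: it assumes decimal (SI) units, and the function names are made up for this example. The IDC and IBM figures come from different sources and need not agree with each other.

```python
import math

# Decimal (SI) units, as assumed for the estimates quoted above.
GIGABYTE = 10**9
QUINTILLION = 10**18
ZETTABYTE = 10**21


def zettabytes_to_gigabytes(zb: float) -> float:
    """Convert zettabytes to gigabytes."""
    return zb * ZETTABYTE / GIGABYTE


def yearly_zettabytes(bytes_per_day: float, days: int = 365) -> float:
    """Scale a daily byte count up to zettabytes per year."""
    return bytes_per_day * days / ZETTABYTE


def doubling_time_years(start: float, end: float, years: float) -> float:
    """Years per doubling implied by growth from start to end."""
    return years / math.log2(end / start)


idc_2011_gb = zettabytes_to_gigabytes(1.8)
ibm_yearly_zb = yearly_zettabytes(2.5 * QUINTILLION)
idc_doubling = doubling_time_years(1.8, 40, 2020 - 2011)

print(f"IDC 2011: {idc_2011_gb:.1e} GB")
print(f"IBM daily rate: about {ibm_yearly_zb:.2f} ZB per year")
print(f"IDC 2011 to 2020: doubling roughly every {idc_doubling:.1f} years")
```

Run as written, the IBM daily figure works out to a little over 0.9 zettabytes per year, and the IDC figures for 2011 and 2020 imply a doubling about every two years, which fits the "every year or two" rule of thumb.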

This massive collection of data is known as Big Data. The term has become very popular as a label for datasets measured in petabytes and exabytes, and it is sometimes also applied to the set of technologies and applications that deal with them.

For the purposes of this article, we will restrict the definition to one that describes a massive amount of data. In the proceedings from the AIP conference in September 2014, Andrea De Mauro, Marco Greco and Michele Grimaldi gave us a more specific and robust definition: “Big Data represents the Information assets characterized by such a High Volume, Velocity and Variety to require specific Technology and Analytical Methods for its transformation into Value”.

It is important to note that this definition covers not only the amount, or Volume, of the data but also the speed, or Velocity, at which it is served and consumed. Streaming has changed our perception of the storage and delivery of data and placed previously unimaginable demands on the infrastructure and application engines that provide them.

An even more recent enhancement to the definition in Wikipedia took the phrase “Volume, Velocity and Variety” and added to it two additional “V” concepts, both of which are extremely relevant to the challenges facing Big Data: Variability and Veracity.

Data Collection and its Uses

The ability to collect massive amounts of data has grown exponentially with the advent of the Internet and the number of people who use it. Data collection happens almost as a side effect of other computing activities. Data is submitted intentionally in the process of creating accounts, uploading files, and other overt actions we take. However, data is also collected by unintentional submission, as a by-product of some other activity. A seemingly private action, like clicking on a link, can provide a piece of information that is valuable to a marketer; and therefore that data is being recorded. And stored. And, somewhere, processed.

Because computers are excellent holders and passers of information, it wasn’t long before marketers and others realized the potential of retaining the vast amount of data available for collection as it was passed around on networks, most notably the Internet (although it is important to remember how much Big Data exists on a company’s intranet). When that data is paired with other data, such as demographic information, a person’s YouTube preferences, location, social behavior, and outlook, it becomes all the more powerful.

Other industries also realized they were able to collect and process information on a scale never seen before. Not only the Internet but also the internal networks of private companies and other entities can hold a vast amount of information. Governments in the U.S., India and other places have been able to run finer-tuned campaigns to win elections. International organizations collect and use data on healthcare, productivity, and employment to help them understand where development dollars are best spent. The private sector uses Big Data in many ways, including the analysis of transaction data. Science and research rely on Big Data to analyze, for instance, the activity of the Large Hadron Collider and the data returned by very large telescope arrays (VLT). In addition, Big Data has changed the face of manufacturing by providing enough data on production, demand, and availability for analysts to understand what drives shortages and overstocks, behaviors that were previously difficult to identify and plan for.

Recording zettabytes of data is one thing; data collection is easy and cheap. Data collection happens when you think you’re doing something else. How we make use of it is an entirely different matter. One of the greatest challenges facing any organization, from marketing to government, is how to use such a massive amount of data effectively.

De Mauro, et al., described Variety as being one of the key characteristics of Big Data. Data sources are everywhere, and collect information of all kinds, some of which should be considered sensitive, and handled securely. Along with the Variety comes Variability, meaning that with data coming from so many different sources, the format and accessibility, even of the same information, can be wildly different. And finally, the dependability of the data – its Veracity – is something that data analysts must keep front and center. “Dirty data” has always been a problem for database administration, but the problem multiplies exponentially in today’s environment, with high volume and multiple sources contributing data.

When it is successfully analyzed, Big Data can help scientists decode DNA, help governments predict terrorist activity, and help your company adjust its offerings to your customers’ needs.

But the question facing the gatherers and holders of such data is: how do we make use of it? And, increasingly, how do we keep it safe?

Challenges and Security

Breaches in security have always been serious, but Big Data security breaches can be disastrous. Data collection can include extremely sensitive and extremely personal information. There is potential for identity theft and malicious manipulation of data. As companies develop their storage and analytics systems for Big Data, security will have to be at the top of their priority list.

Data Analytics Systems. The first major challenge facing Big Data is the simple fact that systems and processes were not built to handle the amount of data that we now expect to process on a regular basis. The storage infrastructure is relatively easy to create: storage has become cheap and available, and its challenges are fairly well understood. Tools to analyze and use the data are being developed now, and high-need companies have built their own in-house data analytics: in 2014, Google processed about 20 petabytes per day.

New technologies present open-source opportunities. If your data analytics system is open-source, it is important that you are able to identify security holes and seal them off.

Multi-Tiered Storage. Traditional storage models used architecture such as multi-tiered storage for data such as transaction records, where the lower-ranked data is stored farther away, in digital terms, and on different media, from the higher-ranked data. However, with the huge amount of data that is now collected, much of the value comes from being able to match up data to identify inconsistencies or patterns in the data, and that is more difficult to do when the data resides on different media and at different RAID levels.

Categories for these tiers may be based on levels of protection needed, requirements for speed of access, or frequency of use, as examples. Assigning data to particular tiers, and thus to particular media, may be an ongoing and highly complex activity. In smaller datasets, an individual might manually move the data, but that isn’t practical with datasets of this size. Some vendors provide software for automatically managing the process based on a company-defined policy. Automated storage tiering (AST) is performed by an application that dynamically moves information between storage tiers. In the past, AST did not keep track of where the data is stored, but now some systems store metadata about every block. This makes automation easier, while adding to the amount of data that needs to be managed.
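As a rough illustration of policy-driven tiering, the sketch below assigns blocks to tiers from per-block metadata. It is not any vendor's AST implementation: the tier names, the thresholds, and the `BlockStats` fields are all assumptions made up for this example.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class BlockStats:
    """Per-block metadata an AST tool might track (hypothetical fields)."""
    block_id: str
    reads_last_30_days: int
    sensitive: bool


# Hypothetical company-defined policy thresholds.
HOT_READS = 100
WARM_READS = 10


def choose_tier(stats: BlockStats) -> str:
    """Pick a tier from protection needs first, then frequency of use."""
    if stats.sensitive:
        return "protected"
    if stats.reads_last_30_days >= HOT_READS:
        return "hot"
    if stats.reads_last_30_days >= WARM_READS:
        return "warm"
    return "cold"


def plan_moves(current: dict[str, str],
               stats: list[BlockStats]) -> dict[str, tuple[str | None, str]]:
    """Return {block_id: (current_tier, target_tier)} for blocks that must move."""
    moves = {}
    for s in stats:
        target = choose_tier(s)
        if current.get(s.block_id) != target:
            moves[s.block_id] = (current.get(s.block_id), target)
    return moves
```

The `current` mapping plays the role of the per-block metadata mentioned above: it makes the moves easy to automate, but it is itself one more dataset that has to be stored and protected.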

Data Validity/Normalization. Another example of a data management issue that clearly becomes a security issue starts with the ability to validate one’s data. In addition to the fact that data comes from so many sources and in so many formats, there is also the problem of malicious manipulation of data. The challenge here is to feel secure about the dataset itself.

In cloud computing, and more generally wherever computing involves storing, accessing, and using this much data, a distributed computing architecture is typically used. In this model, many computers perform the same task on different subsets of the dataset. Those tasks typically involve mapping the data to appropriate fields in the database, maintaining connections between them, and so on.
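The "same task on different subsets" model can be sketched on a single machine. In the example below, worker threads stand in for separate computers, and the record layout (name, city, amount) is invented for illustration; a real system would use a distributed framework rather than a thread pool.

```python
from concurrent.futures import ThreadPoolExecutor


def map_record(raw: str) -> dict:
    """Map one raw comma-separated line to named database fields."""
    name, city, amount = raw.split(",")
    return {"name": name.strip(), "city": city.strip(), "amount": float(amount)}


def map_partition(partition: list) -> list:
    """The task every worker runs, each on its own subset of the data."""
    return [map_record(raw) for raw in partition]


def split(records: list, n_parts: int) -> list:
    """Deal the records out into n_parts subsets."""
    return [records[i::n_parts] for i in range(n_parts)]


def run_distributed(records: list, workers: int = 3) -> list:
    """Run map_partition on every subset in parallel and merge the results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        mapped = list(pool.map(map_partition, split(records, workers)))
    return [row for part in mapped for row in part]


rows = run_distributed(
    ["Ana, Lisbon, 10.5", "Raj, Pune, 4", "Li, Wuhan, 7.25", "Sam, Austin, 1"]
)
print(len(rows), "records mapped")
```

Note that the merged result depends on every worker mapping its subset correctly, so a single faulty or untrusted worker can contaminate the whole dataset.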

An untrusted computer may do the mapping, or the data itself might be wrong; these results can be unintentional or malicious. In either case, data analytics will extrapolate a wrong result, seriously compromising the value of gathering the data to begin with. Mapping data from different sources presents validation challenges even without malicious interference, and even with smaller datasets; multiply this problem by the number of sources and the volume of information that has to be organized. A very large database drawing heavily from the Internet may take in data from sources such as sales, registrations, customer service centers, mail rooms, social media, and so on.

Non-relational Databases. The variability of data presents its own set of problems. It has been said that 90% of the data collected in Big Data datasets arrives unstructured. The best answer is often to move that data to a non-relational database (NRDB). Non-relational databases were originally built to solve specific data-handling problems, generally as part of a larger framework in which security was applied in the middleware. Security was never a goal for the NRDB itself. But NRDBs are seeing increased use by companies with large unstructured datasets, and security will have to be considered more thoroughly.

Input Validation. Again, the sources that feed Big Data are many and varied. Besides the errors inherent in identifying and mapping data correctly, rogue sensors and source spoofing can be used to manipulate data.
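One common way to guard against spoofed sources is to have each source sign what it sends with a shared secret and to range-check the values on arrival. The sketch below uses Python's standard `hmac` module; the sensor ID, the key, the `temp_c` field, and the plausible range are all hypothetical, and a real deployment would also need key distribution and replay protection, which are not shown.

```python
import hashlib
import hmac
import json

# Hypothetical registry of known sensors and their shared secret keys.
SENSOR_KEYS = {"sensor-17": b"demo-secret-key"}


def sign(sensor_id: str, payload: dict, key: bytes) -> str:
    """Compute an HMAC-SHA256 signature over the sensor ID and payload."""
    message = json.dumps({"sensor": sensor_id, "payload": payload},
                         sort_keys=True).encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def accept(sensor_id: str, payload: dict, signature: str) -> bool:
    """Accept a reading only if its source, signature, and value all check out."""
    key = SENSOR_KEYS.get(sensor_id)
    if key is None:
        return False  # unknown source
    expected = sign(sensor_id, payload, key)
    if not hmac.compare_digest(expected, signature):
        return False  # spoofed or tampered reading
    temp = payload.get("temp_c")
    # Assumed plausible range for this illustrative sensor.
    return isinstance(temp, (int, float)) and -50 <= temp <= 60
```

The signature check catches spoofing and tampering in transit, while the range check catches a correctly signed sensor that has gone rogue or simply broken; neither check replaces the other.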

Privacy Risk. Even information stored in different places by different companies can be correlated to identify people and associate them with their personal information. The safety of personal information can be compromised in many ways, and it is imperative that a company that collects data ensures its customers’ information is untouchable.
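To see how correlation across datasets works, consider the minimal sketch below. It links two separately held datasets on shared attributes (ZIP code, birth year, sex); the field names and records are fictional and chosen only to illustrate the idea.

```python
def link(records_a, records_b, keys=("zip", "birth_year", "sex")):
    """Join two datasets on shared attributes, keeping only unique matches."""
    index = {}
    for record in records_b:
        index.setdefault(tuple(record[k] for k in keys), []).append(record)

    matches = []
    for record in records_a:
        candidates = index.get(tuple(record[k] for k in keys), [])
        if len(candidates) == 1:  # exactly one person fits: re-identified
            matches.append({**record, **candidates[0]})
    return matches


# Fictional "anonymous" purchase data held by one company...
purchases = [
    {"zip": "02139", "birth_year": 1980, "sex": "F", "purchase": "vitamins"},
    {"zip": "94105", "birth_year": 1975, "sex": "M", "purchase": "books"},
]
# ...and a fictional named list held by another.
directory = [
    {"zip": "02139", "birth_year": 1980, "sex": "F", "name": "Jane Doe"},
    {"zip": "94105", "birth_year": 1975, "sex": "M", "name": "A. Smith"},
    {"zip": "94105", "birth_year": 1975, "sex": "M", "name": "B. Jones"},
]

linked = link(purchases, directory)
print(linked)
```

Neither dataset names a person alongside a purchase, yet whenever a combination of attributes is unique, the join does exactly that. Removing names alone is therefore not enough to make data safe to share.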

Monitoring. Real-time security monitoring is available, but reports show that it can generate too many false alerts for a human to cope with. In datasets of these sizes, there will be many inconsistencies and abnormal activity, so it becomes unwieldy to try to monitor all activity in real time. A company can boost its security by requiring frequent, granular audits, which, if they are not real-time, are at least frequent enough to catch a breach in its earliest stages.
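A frequent, granular audit can be as simple as a batch job over the access log. The sketch below flags users whose access count in the audit window is far above their usual level; the log format, the baseline figures, and the thresholds are assumptions for illustration rather than recommended values.

```python
from collections import Counter


def audit(events, baseline, factor=3.0, min_count=20):
    """Flag users whose accesses in this window far exceed their baseline.

    events:   access-log entries for the window, each with a "user" key
    baseline: typical accesses per window for each user (hypothetical input)
    """
    counts = Counter(event["user"] for event in events)
    flagged = {}
    for user, count in counts.items():
        expected = baseline.get(user, 0)
        # min_count suppresses alerts on tiny volumes to limit false alarms.
        if count >= min_count and count > factor * expected:
            flagged[user] = count
    return flagged


window = ([{"user": "alice"}] * 25
          + [{"user": "bob"}] * 30
          + [{"user": "carol"}] * 5)
suspicious = audit(window, baseline={"alice": 5, "bob": 20})
print(suspicious)
```

Running a check like this every few minutes per dataset, rather than alerting on every single event, trades a short delay for far fewer alerts that a human has to review.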

Controlled Access. Companies managing this much data must be extremely vigilant about who can access their data and how much. Access should be cryptographically secure, and access should be assigned at the most granular level, always keeping “need to know” as the qualifier for access.
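Granular, need-to-know access can be expressed as field-level grants per role. The sketch below covers only the authorization decision, not the cryptographic side; the role names, field names, and `GRANTS` table are hypothetical.

```python
# Hypothetical need-to-know grants: role -> fields that role may read.
GRANTS = {
    "billing-analyst": {"customer_id", "invoice_total"},
    "support-agent": {"customer_id", "email"},
}


def read_fields(role: str, record: dict, requested: list) -> dict:
    """Return only the requested fields, and only if the role is granted all of them."""
    allowed = GRANTS.get(role, set())  # unknown roles get nothing
    denied = set(requested) - allowed
    if denied:
        raise PermissionError(f"{role} may not read: {sorted(denied)}")
    return {field: record[field] for field in requested}


customer = {
    "customer_id": 42,
    "email": "jane@example.com",
    "invoice_total": 99.5,
    "ssn": "000-00-0000",
}
print(read_fields("billing-analyst", customer, ["customer_id", "invoice_total"]))
```

Denying by default, so that a role or field missing from the table grants nothing, keeps mistakes on the safe side: a forgotten entry blocks access instead of leaking data.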

“Value” is another “V” we can add to our list of V’s that describe Big Data. There is much Value to be found in good use of large quantities of data. But you must be Vigilant to meet the security needs of your High Volume, High-Velocity, and Varied data.

Feature image is designed by Freepik.